Your 2026 Resolution: Add Context to Your Data (Before It Breaks You)

Last week I sat in an executive review where two teams spent forty minutes arguing about "active users." Not about strategy. Not about growth. About what the number meant.

One team counted anyone who logged in. The other excluded users who bounced in under 30 seconds. Neither knew which experiment flags were active when the data was pulled. The dashboard just showed a number. No definition. No lineage. No context.

This happens constantly. And it's about to get significantly worse.

Gartner predicts that through 2026, organizations will abandon 60% of AI projects that are not supported by "AI-ready data." Not because models failed. Not because compute was too expensive. Because the data traveling through these systems carries no meaning beyond the raw values.

The models can't tell the difference between a deprecated pricing page and current policy. They can't distinguish a test account from a real customer. They retrieve answers confidently, cite sources correctly, and still get everything wrong.

This is the year we stop treating context as optional documentation and start treating it as infrastructure.

The Context Engineering Pivot

Something shifted in 2025. The industry stopped talking about "prompt engineering" and started talking about "context engineering."

Andrej Karpathy called it "the delicate art and science of filling the context window with just the right information for each step." MIT Technology Review documented the transition from "vibe coding" to systematic context management. Google's December release of their Agent Development Kit was entirely focused on context architecture.

The terminology change matters. "Prompt" implies a single instruction you craft carefully. "Context" implies an entire information environment you engineer deliberately.

And it turns out most organizations have been engineering that environment with all the care of a teenager cleaning their room by shoving everything under the bed.

David Lanstein, CEO of Atolio, put it bluntly in IBM's 2026 predictions: "The solution isn't bigger models, but smarter data. True value will come from feeding models high-quality, permission-aware structured data to generate intelligent, relevant and trustworthy answers."

The race for bigger context windows missed the point. A 200K token context window filled with undifferentiated garbage produces undifferentiated garbage outputs, just with more confident citations.

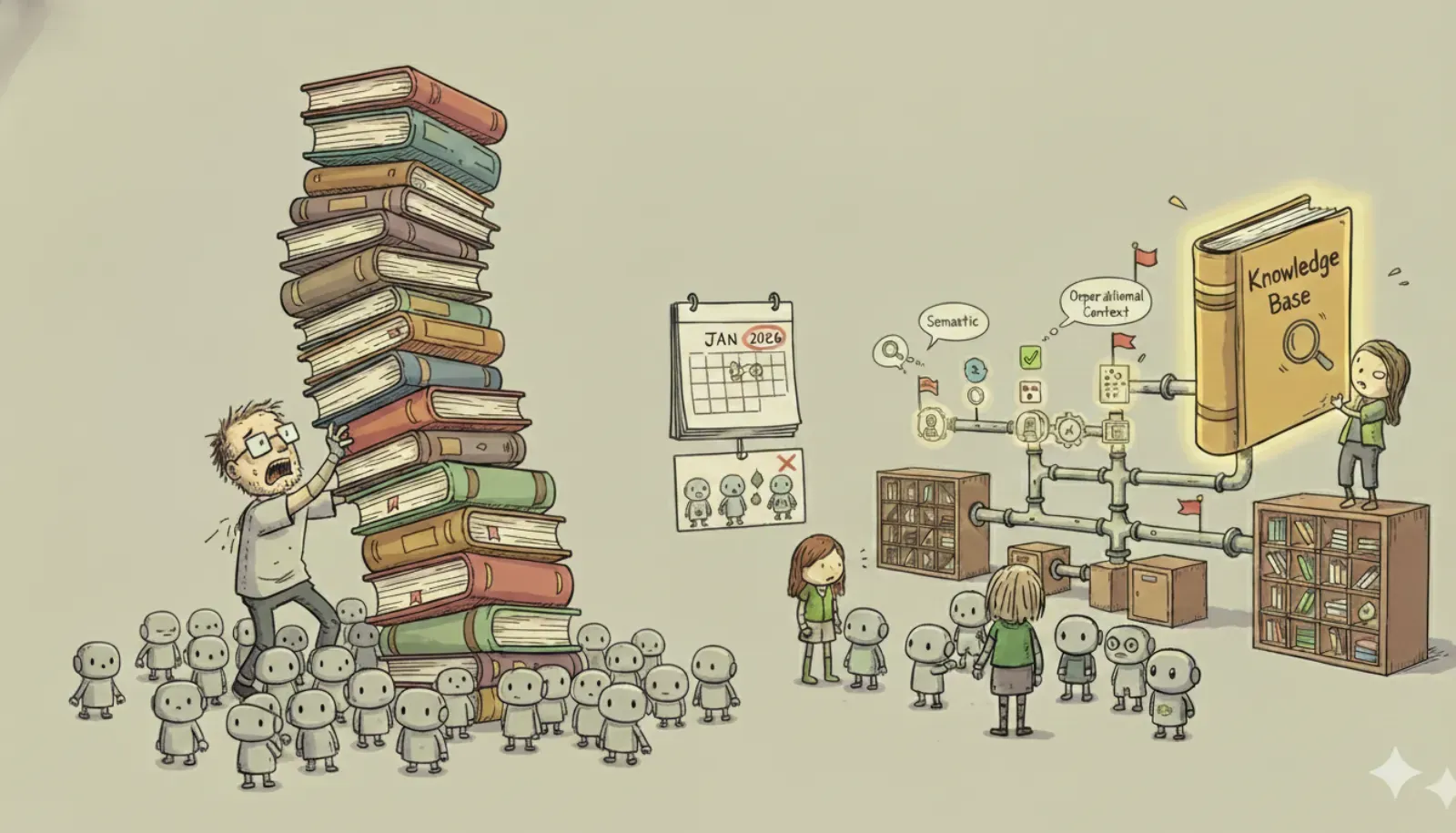

What Context Actually Means

When I talk about context, I don't mean adding a few comments to your SQL. I mean four distinct layers that most data systems ignore entirely.

Semantic context is what a value actually represents. Not just "this column is called revenue" but "this is recognized revenue under ASC 606, calculated monthly, excluding deferred amounts, as defined by the finance team's Q3 2025 policy update." When the definition changes, the context changes with it. When someone queries the data six months from now, they see what it meant then, not what it means today.

Operational context is the health and provenance of the data at query time. Is this number fresh? Did the upstream pipeline fail overnight? Is there an active incident affecting the source system? A dashboard that shows revenue without showing "by the way, the payment processor had a three-hour outage last night" is lying by omission.

Experimental context is which flags and tests were active when the data was generated. Your MAU number is meaningless if you don't know that 40% of users were in an onboarding experiment that changed the activation flow. The number isn't wrong. It's just uninterpretable without the experiment metadata traveling alongside it.

Human context is ownership and decision history. Who defined this metric? What decisions have been made based on it? Where's the design doc? When someone has a question, they shouldn't have to play archeologist in Slack to figure out who to ask.

Most data systems capture maybe one of these. Usually the semantic layer, poorly, in a data catalog that nobody updates and fewer people read.
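
To make the four layers concrete, here is a minimal sketch of a metric that carries its context as data. The class names, field names, and sample values are all hypothetical, chosen for illustration rather than taken from any particular catalog or tool.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class SemanticContext:
    definition: str          # what the value actually represents
    definition_version: str  # which policy revision defined it


@dataclass
class OperationalContext:
    computed_at: datetime                                   # freshness
    open_incidents: list[str] = field(default_factory=list)  # upstream incidents at query time


@dataclass
class ExperimentalContext:
    # flag name -> share of users exposed when the data was generated
    active_flags: dict[str, float] = field(default_factory=dict)


@dataclass
class HumanContext:
    owner: str
    design_doc: Optional[str] = None


@dataclass
class ContextualMetric:
    name: str
    value: float
    semantic: Optional[SemanticContext] = None
    operational: Optional[OperationalContext] = None
    experimental: Optional[ExperimentalContext] = None
    human: Optional[HumanContext] = None

    def missing_layers(self) -> list[str]:
        """Names of the context layers this metric does not carry."""
        layers = {
            "semantic": self.semantic,
            "operational": self.operational,
            "experimental": self.experimental,
            "human": self.human,
        }
        return [name for name, layer in layers.items() if layer is None]


# Illustrative values only: a metric with a definition and nothing else.
mau = ContextualMetric(
    name="monthly_active_users",
    value=120_000.0,
    semantic=SemanticContext(
        definition="users with a session longer than 30 seconds",
        definition_version="2025-Q3",
    ),
)
print(mau.missing_layers())
```

A metric like this one, with only the semantic layer filled in, is roughly what a typical catalog entry gives you today.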

The Kubernetes Lesson I Should Have Learned Sooner

When I was the first product manager on Kubernetes at Google, we thought we'd solved the orchestration problem. Pods, services, deployments. State reconciliation. Declarative configuration. Ship your containers and let the scheduler figure out the rest.

What we hadn't solved was context.

A customer came to us wanting to run a global footprint of clusters, one per region, with synchronized jobs. Low-latency application, workloads coordinated across continents. We had an internal project called "Ubernetes" that was supposed to handle this, but the complexity was brutal. We ended up helping them build a custom solution.

The problem wasn't deploying the workloads. GitOps handles that fine now. The problem was that when data crossed cluster boundaries, all the context about that data evaporated. Each cluster was internally consistent. The global system was broken because nobody knew what the data meant in aggregate.

I've watched the same pattern repeat across every data problem I've worked on since. The compute orchestration is largely solved. The data orchestration is still a mess, and it's a mess because context doesn't travel with the data. That is why I'm writing a book on the subject: the hidden complexity of data preparation that causes 80% of AI projects to fail.

Why RAG Doesn't Fix This

The popular assumption has been that Retrieval-Augmented Generation solves the context problem. Point your model at your documents, let it retrieve what it needs, problem solved.

InfoWorld's analysis last week explains why this breaks at scale: "RAG breaks at scale because organizations treat it like a feature of LLMs rather than a platform discipline. Models generate confidently incorrect answers because the retrieval layer returns ambiguous or outdated knowledge."

The failure mode is insidious. RAG with good retrieval but no context governance produces what I've started calling "hallucination with citations." The model cites a real document. The citation is accurate. The document is from 2023 and contradicts current policy. The answer is wrong, but it looks impeccably sourced.

CX Today reported on exactly this pattern: "If the knowledge base is outdated, RAG just retrieves the wrong answer faster. If content is unstructured, like PDFs, duplicate docs, or inconsistent schemas, the model struggles to pull reliable context."

The problem isn't retrieval. The problem is that the documents themselves carry no context about their validity, scope, or temporal bounds. A PDF is just a PDF. It doesn't know that it was superseded by a newer version, that it only applies to EMEA customers, or that the pricing section was invalidated by a board decision last quarter.

When VentureBeat declared "RAG is dead" in their 2026 predictions, they were being provocative. But the underlying point stands: RAG without context governance is dying. The organizations that will succeed with retrieval-augmented systems are the ones treating their knowledge bases as living, context-rich assets rather than static document dumps.

The Toll Booth Is Coming

There's a harder version of this problem emerging, and most organizations haven't noticed it yet.

Constellation Research warns that "enterprise data tolls and API economics are going to be a headache" in 2026. Celonis is suing SAP over data access. The Information reported that Salesforce is raising prices on apps that tap into its data. Connector fees are trickling down to IT budgets.

"Connection fees are going to be the new cloud egress," Constellation writes.

Here's what this means: if you don't own the context layer for your own data, you'll rent it from someone else. Every vendor building "AI-ready" connectors is essentially building a context layer on top of your data and charging you for access to the meaning of information you already own.

The Solutions Review predictions roundup makes this explicit: "By the end of 2026, connectivity, governance, and context provisioning for AI agents will be built into every serious data platform."

Built in. Not optional. Not a nice-to-have catalog project. Core infrastructure.

The organizations that treat context as someone else's problem will find themselves paying tolls to access the semantic meaning of their own customer data. The ones that invest now will own that layer.

Resolution #1: Ship Context With Every Event

The practical version of this starts at ingestion.

Every event entering your system should carry enough metadata that a reader (human or machine) can interpret it without external lookups. Not "user_id, timestamp, action" but "user_id, timestamp, action, schema_version, experiment_flags, source_system, data_classification."
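
As a rough sketch of what that looks like at ingestion, the check below flags events that arrive without the context fields listed above. The field names come from the list; the function name, the sample values, and the choice to report missing fields instead of rejecting the event are my own assumptions.

```python
# Context fields every event is expected to carry, per the list above.
REQUIRED_CONTEXT = (
    "schema_version",
    "experiment_flags",
    "source_system",
    "data_classification",
)


def missing_context(event: dict) -> list[str]:
    """Return the required context fields that are absent from the event."""
    return [key for key in REQUIRED_CONTEXT if key not in event]


# Hypothetical sample events for illustration.
bare_event = {
    "user_id": "u-123",
    "timestamp": "2026-01-05T09:30:00Z",
    "action": "login",
}

rich_event = {
    **bare_event,
    "schema_version": "2.1",
    "experiment_flags": {"new_onboarding": "treatment"},
    "source_system": "web-app",
    "data_classification": "internal",
}

print(missing_context(bare_event))  # all four context fields are missing
print(missing_context(rich_event))  # nothing is missing
```

Whether an incomplete event gets rejected, quarantined, or just counted is a policy decision. The point is that the check runs at the door, not six months later in a meeting.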

This isn't aspirational. Anthropic's context engineering guide describes a closely related pattern: maintaining lightweight identifiers that allow systems to "dynamically load data into context at runtime using tools."

A transformation editor should show, live, which downstream dashboards and models will break if you drop a column. A query should surface its lineage alongside its results. A dashboard shouldn't just display a number; hovering over it should reveal the definition, the upstream tables, the freshness, and the last incident that affected it.
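
The "what breaks if I drop this column" check is, at its core, a walk over a lineage graph. Here is a minimal sketch, assuming lineage is already available as a simple mapping from each asset to the assets it feeds. The asset names are invented for illustration, and a real system would read this graph from its lineage store.

```python
from collections import deque

# Hypothetical lineage: each key feeds the assets listed in its value.
LINEAGE = {
    "orders.amount": ["daily_revenue"],
    "orders.region": ["daily_revenue", "regional_orders"],
    "daily_revenue": ["revenue_dashboard", "churn_model"],
    "regional_orders": ["emea_dashboard"],
}


def downstream_of(node: str, lineage: dict) -> set:
    """Breadth-first walk returning every asset reachable from `node`."""
    seen = set()
    queue = deque([node])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


# Everything that would be affected by dropping the `region` column.
impacted = downstream_of("orders.region", LINEAGE)
print(sorted(impacted))
```

The traversal is the easy part. The hard part is keeping the graph current, which is why lineage has to be emitted by the pipeline itself and not maintained by hand.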

This requires tooling changes, yes. But mostly it requires treating context as a first-class output of every pipeline stage rather than an afterthought someone might add later.

Resolution #2: Make Context the Default in AI and Agents

TechCrunch's 2026 analysis identifies the Model Context Protocol as "quickly becoming the standard" for agent interoperability. OpenAI and Microsoft have embraced it. Google is standing up managed MCP servers.

The infrastructure for context-aware agents is arriving. The question is whether your data is ready to participate.

That means storing valid_from/valid_to timestamps on policy documents. It means tagging content with scope limitations (region, customer tier, product line). It means encoding data classification and retention rules at the source, not in a compliance spreadsheet nobody maintains.
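
Here is a minimal sketch of how those tags could be used to filter retrieved documents before they reach a model. The valid_from and valid_to names come from the paragraph above. Everything else is an assumption for illustration: the PolicyDoc class, the document IDs, the dates, and the convention that valid_to is exclusive and None means still in force.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class PolicyDoc:
    doc_id: str
    valid_from: date
    valid_to: Optional[date]           # None means the document is still in force
    regions: frozenset = frozenset()   # empty means it applies everywhere


def is_applicable(doc: PolicyDoc, on: date, region: str) -> bool:
    """True if the document is in force on `on` and covers `region`."""
    started = doc.valid_from <= on
    not_expired = doc.valid_to is None or on < doc.valid_to  # valid_to is exclusive
    in_scope = not doc.regions or region in doc.regions
    return started and not_expired and in_scope


def filter_retrieved(docs: list, on: date, region: str) -> list:
    """Keep only the retrieved documents that apply to this query."""
    return [d for d in docs if is_applicable(d, on, region)]


# Hypothetical retrieval results.
retrieved = [
    PolicyDoc("pricing-2023", date(2023, 1, 1), date(2025, 7, 1)),
    PolicyDoc("pricing-2025", date(2025, 7, 1), None),
    PolicyDoc("emea-addendum", date(2025, 1, 1), None, frozenset({"EMEA"})),
]

current = filter_retrieved(retrieved, on=date(2026, 1, 15), region="NA")
print([d.doc_id for d in current])
```

With these sample documents, the superseded 2023 pricing page and the EMEA-only addendum never reach a model answering a North American customer, no matter how well they matched the query.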

Stanford HAI's predictions note that "2026 will hear more companies say that AI hasn't yet shown productivity increases." The organizations that do show productivity increases will be the ones whose agents can distinguish current reality from historical noise without human intervention.

An agent that refuses to take high-impact actions without verifying the environment, cohort, and guardrails is not cautious. It's correctly engineered. An agent that charges ahead on stale data with high confidence is the expensive kind of wrong.
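
A preflight check along those lines might look like the sketch below. It is one possible shape, not a reference implementation: the ActionRequest fields, the one-hour staleness threshold, and the refusal messages are all assumptions I picked for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class ActionRequest:
    name: str
    high_impact: bool
    environment: Optional[str]   # e.g. "prod"; None if not verified
    cohort: Optional[str]        # which users are affected; None if not verified
    guardrails_checked: bool     # were limits and approvals confirmed?
    data_as_of: datetime         # freshness of the data behind the decision


def preflight(request: ActionRequest, now: datetime,
              max_staleness: timedelta = timedelta(hours=1)) -> list[str]:
    """Return reasons to refuse; an empty list means the action may proceed."""
    if not request.high_impact:
        return []
    problems = []
    if request.environment is None:
        problems.append("environment not verified")
    if request.cohort is None:
        problems.append("cohort not verified")
    if not request.guardrails_checked:
        problems.append("guardrails not checked")
    if now - request.data_as_of > max_staleness:
        problems.append("data is stale")
    return problems


# Hypothetical request: a bulk refund planned on six-hour-old data.
now = datetime(2026, 1, 15, 12, 0, tzinfo=timezone.utc)
refund = ActionRequest(
    name="bulk_refund",
    high_impact=True,
    environment="prod",
    cohort=None,
    guardrails_checked=True,
    data_as_of=now - timedelta(hours=6),
)
print(preflight(refund, now))
```

Returning the list of reasons, not a bare True or False, means the refusal itself carries context a human can act on.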

Resolution #3: Measure Time to Trustworthy Insight

I wrote about Nicole Forsgren's new book last month. Her frameworks for developer productivity apply directly to data work, but with a crucial modification.

For data teams, the north star isn't deployment frequency or cycle time. It's time to trustworthy insight: from raw logs or events to a result you would put in front of an executive with confidence.

Most organizations can't measure this because they don't know when insight becomes trustworthy. The data arrives, transformations run, dashboards update, but confidence accrues informally. Someone senior enough eventually blesses the number based on vibes and experience.

Context infrastructure makes this measurable. If every metric carries its lineage, freshness, and incident history, you can ask: how long did it take from data landing to a metric with full provenance, no upstream incidents, and a defined owner? That's the number that matters.
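
One way to turn that question into a number, sketched under my own assumptions: treat a metric as trustworthy at the later of two moments, when its provenance is fully recorded and when upstream incidents are cleared, and only if it has an owner. The MetricRun fields and the sample timestamps are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class MetricRun:
    landed_at: datetime                         # raw data landed
    provenance_complete_at: Optional[datetime]  # lineage fully recorded
    incidents_cleared_at: Optional[datetime]    # no open upstream incidents from here on
    owner: Optional[str]                        # who answers for this metric


def time_to_trustworthy_insight(run: MetricRun) -> Optional[timedelta]:
    """Elapsed time from data landing to a trustworthy metric, or None if it never got there."""
    if (run.owner is None
            or run.provenance_complete_at is None
            or run.incidents_cleared_at is None):
        return None
    trustworthy_at = max(run.provenance_complete_at, run.incidents_cleared_at)
    return trustworthy_at - run.landed_at


# Hypothetical overnight run: lineage was ready quickly, but an incident lingered.
run = MetricRun(
    landed_at=datetime(2026, 1, 15, 2, 0),
    provenance_complete_at=datetime(2026, 1, 15, 2, 40),
    incidents_cleared_at=datetime(2026, 1, 15, 5, 15),
    owner="finance-analytics",
)
print(time_to_trustworthy_insight(run))
```

A result of None is a finding too: it marks a metric that people are using but that never met the bar.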

When that number shrinks, you're actually improving. When people are just shipping dashboards faster without context, you're accumulating debt.

The Year We Stop Arguing About Definitions

Most New Year's resolutions fail by February. The gym membership lapses. The meditation app goes unused. The ambitious reading list gathers dust.

Data resolutions fail for the same reason: they're framed as one-time efforts rather than infrastructure changes. "We'll document our metrics" becomes a Q1 project that never gets maintained. "We'll improve data quality" becomes a dashboard that nobody checks.

Context isn't a project. It's a property of how data moves through your organization. It either travels with its story or it doesn't.

The organizations that treat 2026 as the year context becomes default will stop having the same arguments in every meeting. The exec review becomes a discussion of strategy instead of a debate about what "active users" means. The AI agent produces answers that come with their own credibility assessment. The data team ships products instead of debugging why downstream consumers don't trust the numbers.

Gartner says 60% of AI projects will be abandoned for lack of AI-ready data. The projects that succeed will be the ones that stopped treating data as numbers and started treating it as knowledge.

That's the resolution. Make the data know what it is.

Want to learn how intelligent data pipelines can reduce your AI costs? Check out Expanso. Or don't. Who am I to tell you what to do.

NOTE: I'm currently writing a book called "Zen and the Art of Data Maintenance," based on what I've seen of the real-world challenges of data preparation for machine learning, with a focus on operations, compliance, and cost. I'd love to hear your thoughts!