One Year After DeepSeek and The $600 Billion Question

What would you do with a sum of money equivalent to 2% of the US GDP?

One year ago last month, DeepSeek dropped R1 and wiped $1 trillion off Nvidia's market cap in a single trading session. A lot of people made the leap to a big conclusion: frontier AI doesn't require unlimited compute. A Chinese startup, working under U.S. export restrictions, built a model that competed with GPT-4 at a fraction of the training cost. Architecture mattered more than hardware budgets.

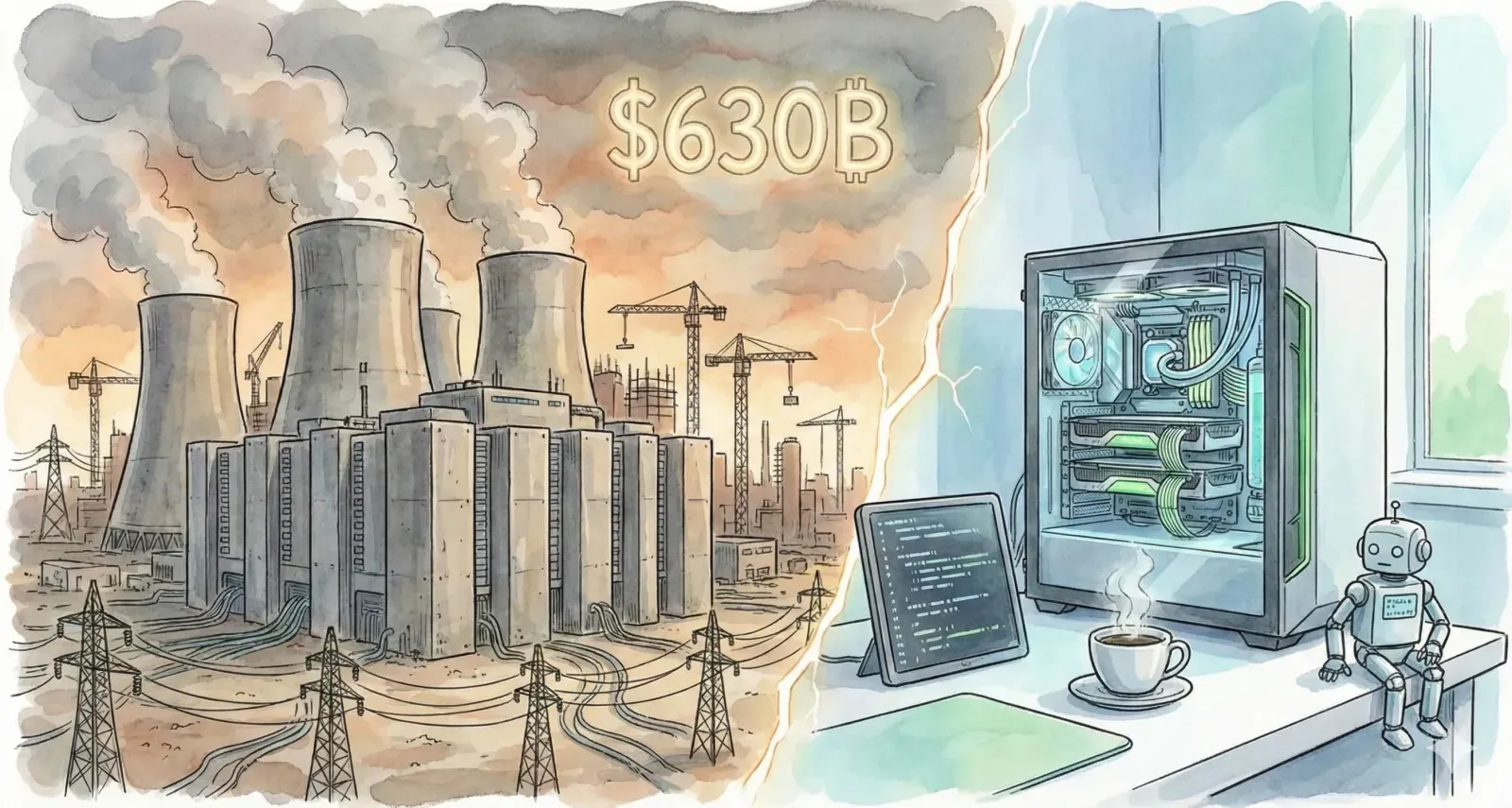

The industry clearly saw things differently. This week's earnings season confirmed $630 billion in combined capital expenditure for 2026. That's roughly 2% of projected U.S. GDP, concentrated in four companies, pointed at a different thesis: AI requires massive centralized infrastructure, and whoever builds the most wins.

DeepSeek V4 drops around February 17. Open weights. Consumer hardware. Eight days from now.

So here we are, one year later, with two radically different theories about AI infrastructure colliding in real time. The $600 billion question isn't which side is right. It's whether anyone is even asking the question.

The Numbers Got Worse

Hyperscaler capex projections have always been aggressive, but the confirmed numbers make those projections look quaint.

Amazon announced $200 billion in 2026 capex last Thursday, blowing past analyst estimates of $146.6 billion. The stock dropped almost 9% the next morning. Morgan Stanley now projects Amazon's free cash flow will go negative by $17 billion this year; Bank of America sees a $28 billion deficit. A company that printed money for a decade is about to start burning it.

Alphabet guided $175 to $185 billion, nearly doubling last year. They're raising $15 billion in high-grade bonds to help fund it. Microsoft's capex is climbing toward $123 billion. Meta committed to as much as $135 billion, and when their CFO was asked about buybacks and capital allocation, she responded that the "highest order priority is investing our resources to position ourselves as a leader in AI." Barclays estimates Meta's free cash flow will drop nearly 90%.

Wall Street is not handling this well. The S&P 500 went negative for 2026. Software stocks have shed roughly $1 trillion in market value since January 28. A Bank of America fund manager survey found 53% now call AI stocks a bubble. The market's message is unambiguous: investors will fund AI infrastructure, but they're no longer willing to do it on faith.

Meanwhile, in a Research Lab

While the hyperscalers were booking their earnings calls, DeepSeek was publishing papers.

On January 1st, they released their work on Manifold-Constrained Hyper-Connections (mHC), a method for scaling models without the gradient instability that typically forces you to throw more hardware at the problem. The core insight is almost embarrassingly elegant: instead of fighting instability with brute compute, you constrain how information flows between layers so that signals can't explode or vanish. In testing across 3B, 9B, and 27B parameter models, mHC-powered architectures outperformed baselines on eight benchmarks with only 6.27% hardware overhead.

On January 12th, DeepSeek and Peking University published Engram, a conditional memory module that separates static knowledge retrieval from dynamic reasoning. Current LLMs waste enormous computational resources "rediscovering" simple facts (like "the capital of France is Paris") through expensive neural network passes. Engram replaces that with O(1) hash-based lookups for static patterns, reserving the heavy compute for actual reasoning. Benchmark improvements of 3-5 points across knowledge, reasoning, and coding tasks. And the 100-billion-parameter embedding table? It can be offloaded to system DRAM with throughput penalties below 3%, meaning you don't even need GPU high-bandwidth memory for the knowledge retrieval portion of the model.

DeepSeek's founder Liang Wenfeng put it simply: "MoE solved the problem of 'how to compute less,' while Engram directly solves the problem of 'don't compute blindly.'"

V4 reportedly combines both innovations. One trillion total parameters, but only 32 billion active per token through sparse mixture-of-experts. Context windows exceeding one million tokens. Internal benchmarks reportedly outperforming Claude and GPT-4o on coding tasks. And it runs on dual RTX 4090s or a single RTX 5090. Consumer hardware. Open weights.

That last part deserves emphasis: organizations with strict data governance requirements could run a frontier-class model entirely within their own infrastructure, on hardware that fits in a standard workstation, without sending a single byte to an external API.

The Architectural Divergence

There are two competing theories about the future of AI infrastructure, and $630 billion is riding on which one is right.

The hyperscaler thesis says AI requires massive centralized compute, so whoever builds the biggest data centers and locks in the most power capacity wins. This thesis treats compute like oil: scarce, centralized, and controlled by whoever owns the wells. Every dollar of capex is a bet that enterprises will always need to rent someone else's GPUs.

The DeepSeek thesis says AI requires smart architecture, so whoever builds the most efficient models wins regardless of hardware budget. This thesis treats compute like solar panels: distributed, increasingly cheap, and most valuable when deployed close to where the energy is consumed. Every research paper is a bet that centralization is a temporary artifact of immature architectures, not a permanent requirement.

One thesis requires $630 billion and unprecedented power infrastructure. The other requires good ideas and the willingness to publish them.

I want to be fair to the hyperscalers. They're not stupid, and the demand for cloud AI services is real and growing. Amazon's AWS revenue was up 24% last quarter. These companies have massive backlogs of enterprise commitments. The infrastructure they're building will get used, at least in the near term.

The more interesting question is whether "it'll get used" is the same as "it's the right long-term architecture." Mainframes got used for decades after distributed computing proved more flexible, and the companies that built them weren't wrong about demand. They were wrong about the direction of the industry. The question isn't whether centralized AI infrastructure has customers today. It clearly does. The question is whether it's the foundation for the next decade, or a very expensive bridge to something more distributed.

The Revolt Is a Signal

There's a parallel story developing that the capex-obsessed coverage keeps missing.

Across the United States, a grassroots backlash against data center construction is reshaping local politics. At least 19 Michigan communities have passed or proposed moratoriums on data center development. A coalition of more than 230 environmental organizations is demanding a national moratorium. Senator Bernie Sanders has called for a federal halt on construction. The opposition crosses every political and demographic line: Republican and Democrat, rural and suburban, wealthy and working-class.

Microsoft's response tells you how serious this has gotten. In January, they unveiled a "community first" initiative where they pledged to cover full power costs, reject local tax breaks, and replenish more water than they use. Brad Smith admitted that in 2024, communities wanted to talk about jobs; by October 2025, the conversations were about electricity prices and water. "That is clearly not the path that's going to take us forward," he said about the old approach of buying land under NDAs and leaving communities in the dark.

Microsoft is investing heavily to make communities comfortable with data centers. DeepSeek is building models that might not need them at all. These two strategies could coexist for a while, but they point in very different directions.

The conventional framing treats community opposition as a PR problem to manage, a permitting obstacle to overcome. I think it's worth considering the possibility that it's actually a signal about architectural constraints. Power grids are at capacity. Water resources are strained. Residential electricity bills are climbing. The communities pushing back aren't anti-technology; they're doing straightforward cost-benefit analysis and concluding that the current balance doesn't work for them.

If AI inference can happen at the edge, on local hardware, processing local data with local power, the entire calculus changes. You're no longer asking one community to bear concentrated costs for diffuse benefits. You're distributing both the compute and the infrastructure load. No single grid hits crisis thresholds. No single community absorbs all the pain.

That's not idealism. That's just physics. Power consumption follows compute. Distribute the compute, distribute the power demand.

What We Should Be Asking

The interesting lesson of DeepSeek R1 was never "China can do AI too." It was that the link between AI progress and unlimited compute turned out to be an assumption, not a law of nature. Architectural innovation could substitute for hardware budgets. Efficiency could compete with scale. That doesn't mean it always will, but it opened a door that nobody can close.

One year later, the hyperscalers have doubled and tripled down on scale. The capex commitments made this week are 3-6x what these same companies spent in 2023. Amazon is willing to go negative on free cash flow. Alphabet is issuing bonds. Meta's CFO is telling shareholders that buybacks take a back seat to infrastructure spending. These are smart companies with deep customer relationships and massive existing demand. They may be right that centralized infrastructure is where the value accrues.

But the evidence on the efficiency side keeps accumulating too. DeepSeek's mHC and Engram papers demonstrate architectural pathways that decouple model capability from hardware requirements. V4 is poised to put a frontier-class model on consumer hardware with open weights. The gap between what efficient architecture can deliver and what centralized infrastructure is built to provide keeps widening.

75% of enterprise data is generated outside traditional data centers: IoT sensors, edge devices, mobile applications, manufacturing floors, retail locations. The current model says "move that data to where the compute is." If the compute can go anywhere, the physics of data gravity reverse. You don't ship data to the model; you ship the model to the data. Compliance becomes simpler because regulated data never crosses jurisdictions. Latency drops because inference happens locally. Cost structures transform because you eliminate egress fees, GPU rental, and data transfer charges entirely.

Eight Days

DeepSeek V4 is expected around February 17th. Open weights. Consumer hardware. Architectural innovations that separate memory from reasoning and stabilize training at trillion-parameter scale.

One year ago, R1 proved that frontier AI doesn't require frontier budgets. The industry shrugged and wrote $630 billion in checks. Next week, V4 is going to make the same argument with better evidence, stronger architecture, and an even more pointed contrast with Western spending.

The question I keep coming back to: what would it take for the industry to treat architectural efficiency not as a curiosity from a Chinese lab, but as a legitimate alternative path? Not necessarily the only path. Just one worth serious investment alongside the centralized buildout.

The hyperscalers are betting you'll always need them. DeepSeek is betting you won't. Both sides have evidence. Both sides have blind spots.

But $630 billion is a lot of money to commit to one side of a bet that's still very much open.

Want to learn how intelligent data pipelines can reduce your AI costs? Check out Expanso. Or don't. Who am I to tell you what to do.*

NOTE: I'm currently writing a book based on what I have seen about the real-world challenges of data preparation for machine learning, focusing on operational, compliance, and cost. I'd love to hear your thoughts!