The Anthropic $1.5 Billion Settlement Nobody's Reading Right: Your Enterprise Data is a Ticking Time Bomb

Anthropic's $1.5B settlement underscores the legal risks of data acquisition: prioritize data rights, and favor secure, governed datasets over external sources.

September 18, 2025

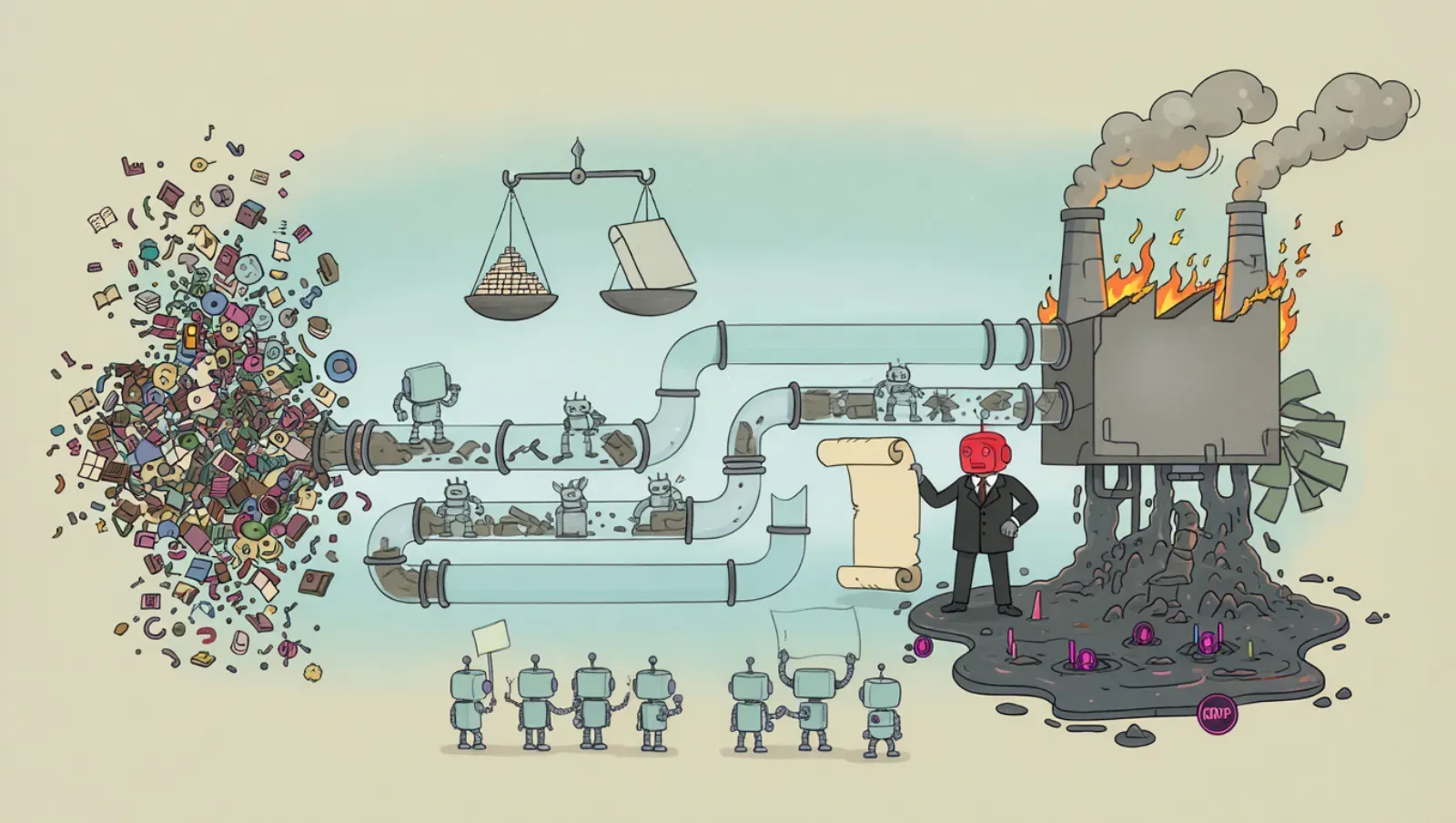

Anthropic has agreed to pay $1.5 billion to settle with authors and copyright holders, billed as the largest copyright payout in U.S. history. After a judge ruled that downloading millions of pirated books from online "shadow libraries" was not fair use, the message is clear: the age of consequence-free data acquisition is over.

For tech companies, it's a warning shot. For enterprises, it should be a red siren: Do you have rights to the data you're training on?

The Turning Point

This isn't some scrappy startup getting slapped. Anthropic is the careful one, the ethics-focused one, backed by Amazon and Google with billions in funding. It still got caught.

The plaintiffs' argument was simple: these models work because they were trained on copyrighted content without permission or payment. Sound familiar? It should. Right now, your enterprise is probably doing the same thing.

Consider: the web scraping market hit $1.03 billion in 2025. According to ScrapeOps, 42% of enterprise data budgets now go to external data collection. Nearly half your data spend is on acquiring data you don't own, can't verify, and might not legally be able to use.

Your Hidden Liability

Every company building a "data moat" out of data it can't account for risks building a legal time bomb instead.

First, you don't know what you're scraping. That LinkedIn data? Those customer reviews? The competitor intel from behind a registration wall? Each is a potential violation of GDPR (fines up to €20 million or 4% of global annual turnover, whichever is higher), CCPA (up to $7,500 per intentional violation), or the CFAA (which carries criminal penalties).

Even paid data has traps. You license weather data, put it in your model, ship the model in an app, distribute through app stores - congratulations, you've probably violated the original license.

Second, you can't verify provenance. When Anthropic's lawyers meet the plaintiffs, they at least know what they're accused of using. But in your data lake, can you prove which data came from legitimate sources? Whether it contained personal information? What copyright restrictions apply?
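Those three questions can be turned into a per-dataset provenance record. The following is a minimal Python sketch, assuming a hypothetical `ProvenanceRecord` schema with `source`, `contains_pii`, and `license` fields (not the schema of any real catalog product); it simply flags every dataset for which one of the questions has no recorded answer.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProvenanceRecord:
    # Hypothetical schema: one record per dataset in the lake.
    name: str
    source: Optional[str] = None         # where the data came from (system, vendor, URL)
    contains_pii: Optional[bool] = None  # None means nobody has checked
    license: Optional[str] = None        # license or contract governing its use


def audit(records: list[ProvenanceRecord]) -> dict[str, list[str]]:
    """Return, for each dataset, the provenance questions it cannot answer."""
    gaps: dict[str, list[str]] = {}
    for rec in records:
        missing = []
        if not rec.source:
            missing.append("source")
        if rec.contains_pii is None:
            missing.append("pii_status")
        if not rec.license:
            missing.append("license")
        if missing:
            gaps[rec.name] = missing
    return gaps


lake = [
    ProvenanceRecord("crm_accounts", source="internal CRM", contains_pii=True, license="internal"),
    ProvenanceRecord("scraped_reviews", source="third-party sites"),
    ProvenanceRecord("weather_feed", source="vendor API", contains_pii=False, license="vendor EULA"),
]

print(audit(lake))  # {'scraped_reviews': ['pii_status', 'license']}
```

An empty result does not prove the answers are correct, only that someone recorded them; the point is that a dataset with gaps is one your legal team cannot yet defend.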

OpenAI now reveals training data to attorneys only in a secure room, no devices, no internet - treating data sources like nuclear codes. Meanwhile, your team dumps everything into Snowflake and calls it done.

The $15 Million Problem

Here's the kicker: while you're scrambling for external data, 68% of your internal data sits unused. You're taking legal risks to acquire what you don't own while ignoring two-thirds of what you do.

The real costs of dark data:

Compliance Risk: "We didn't know it was there" won't save you from GDPR fines.

Security Nightmare: Forgotten data isn't in your governance docs, but hackers will find it.

Storage Bleeding: $50K monthly to store data you've never queried.

Opportunity Cost: That sensor data could predict failures. That transaction data could prevent churn. Instead, you're scraping competitor prices.

Productivity Drain: Your data scientists spend 40% of their time just finding data. PhD-level hide and seek.

The Reckoning

The Anthropic settlement isn't isolated. OpenAI faces twelve consolidated suits. Canadian news organizations are suing over scraped content. This is the new normal.

AI companies at least know the game - teams of lawyers, documented pipelines, clear data lineage. Your enterprise? Workers waste 1.8 hours daily searching for data they can't find. If your own employees can't locate it, how will your legal team defend it?

Between 60% and 73% of enterprise data goes unused for analytics. It's ungoverned, unmonitored, unknown. When regulators come (and they will), you'll need to explain petabytes of data you can't even identify.

The Path Forward

The settlement teaches three lessons:

- Rights matter more than access. Technical capability isn't legal permission.

- Unknown data is toxic data. Every byte is either an asset or a liability. Guess which yours is.

- Internal first, external second. Before spending another dollar on external data, know what you own, where it lives, who's accessed it, and how to use it without moving it.
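Knowing what you own starts with an inventory. Here is a minimal Python sketch, assuming a hypothetical catalog export in which every dataset has a location, a size, and a last-access date (real warehouses and catalogs expose this differently, if at all). It measures how much stored volume nobody has read within a chosen window.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class CatalogEntry:
    # Hypothetical inventory row: what the dataset is, where it lives,
    # how big it is, and when anyone last read it.
    name: str
    location: str
    size_tb: float
    last_accessed: Optional[date]  # None = no recorded access at all


def dark_data(entries: list[CatalogEntry], today: date, max_idle_days: int = 365):
    """Return the datasets not read within the window and their share of stored volume."""
    cutoff = today - timedelta(days=max_idle_days)
    dark = [e for e in entries if e.last_accessed is None or e.last_accessed < cutoff]
    total_tb = sum(e.size_tb for e in entries)
    dark_tb = sum(e.size_tb for e in dark)
    share = dark_tb / total_tb if total_tb else 0.0
    return dark, share


entries = [
    CatalogEntry("orders", "warehouse", 2.0, date(2025, 9, 1)),
    CatalogEntry("sensor_archive", "object storage", 6.0, None),
    CatalogEntry("legacy_logs", "on-prem NAS", 2.0, date(2023, 1, 15)),
]

dark, share = dark_data(entries, today=date(2025, 9, 18))
for e in dark:
    print(f"{e.name} @ {e.location}: last read {e.last_accessed or 'never'}")
print(f"{share:.0%} of stored TB is dark")  # 80% of stored TB is dark
```

The 365-day window is an arbitrary choice for illustration; the useful output is the list itself, because each dark dataset is either an unexploited asset or an unmanaged liability.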

The Real Solution

Stop acquiring. Start orchestrating.

The survivors won't have the most data. They'll have:

- Proven ownership

- Complete lineage from source to insight

- Intelligent orchestration without wasteful movement

- AI-ready processing where data lives

This requires intelligent pipelines that track everything from millisecond one. Know your rights to every byte. Process data where it lives instead of centralizing into a swamp. Make it AI-ready from the start.

(Yes, this is what we're building at Expanso - because someone has to.)
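Lineage matters because rights flow downstream, as the weather-data trap shows: a model inherits the restrictions of every input it was built from. Below is a minimal Python sketch, assuming each dataset carries a hypothetical set of permitted-use labels (real licenses are far more nuanced and need legal review). A derived artifact's effective permissions are the intersection of its own and all of its ancestors'.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dataset:
    # Hypothetical lineage node: the uses its license or contract permits,
    # plus the upstream datasets it was derived from.
    name: str
    permitted_uses: frozenset[str]
    parents: tuple["Dataset", ...] = ()


def effective_uses(ds: Dataset) -> frozenset[str]:
    """A derived artifact may only be used in ways that every upstream input allows."""
    uses = ds.permitted_uses
    for parent in ds.parents:
        uses = uses & effective_uses(parent)
    return uses


weather = Dataset("licensed_weather", frozenset({"internal_analytics", "model_training"}))
sales = Dataset("internal_sales", frozenset({"internal_analytics", "model_training", "distribution"}))
model = Dataset(
    "demand_model",
    frozenset({"internal_analytics", "model_training", "distribution"}),
    parents=(weather, sales),
)

print(sorted(effective_uses(model)))  # ['internal_analytics', 'model_training']
print("distribution" in effective_uses(model))  # False
```

The model's own label claims "distribution", but the weather license never granted it, so shipping the model in an app would exceed what its inputs allow. Tracking lineage is what makes that conflict visible before release rather than in discovery.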

Your competitive advantage isn't in scraping competitors. It's in the 68% of data you're ignoring - data you own, understand, and can legally use. But only if your pipelines are intelligent enough to handle it.

The Anthropic settlement marks the end of "grab everything, figure it out later." The question isn't whether you'll face your data reckoning. It's whether you'll be ready when it arrives.

Or whether you'll be explaining to regulators why you have 500TB of data you can't identify, can't govern, and can't delete.

Choose wisely: intelligent orchestration or expensive litigation.

Is your data an asset or a liability? Can you prove ownership of every dataset in your lake?