Edge ML Has a Size Obsession

The industry spent three years compressing models when most edge deployments never needed neural networks. The real bottleneck isn't model size; it's deployment orchestration.

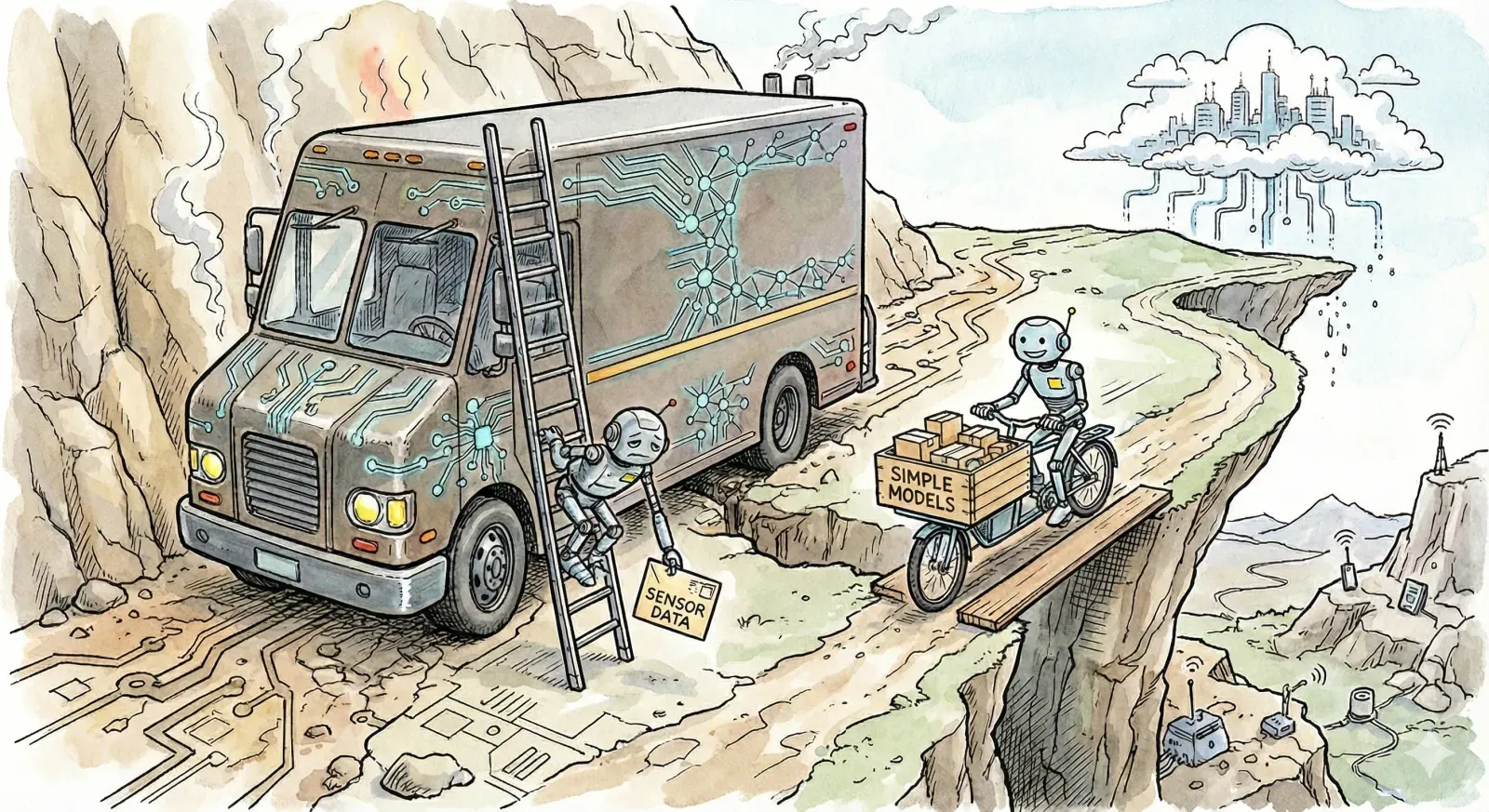

UPS could deliver your Amazon package on a cargo e-bike. In most cities, for most packages, this would actually be faster. No parking. No traffic. Straight to your door.

Instead, a 16,000-pound truck idles outside your apartment building while the driver walks up three flights of stairs with an envelope containing a phone charger.

It's not that UPS is stupid. The truck handles the complicated cases: bulk deliveries, heavy items, and commercial routes with 200 stops. Once you've built infrastructure for complex cases, running it for easy cases feels free. Same truck, same driver, same route. Why optimize?

But "feels free" isn't free. The truck burns diesel at idle. It needs a commercial parking spot that doesn't exist. The driver spends 30% of their day not delivering packages but managing the logistics of operating a vehicle designed for a more complex problem than most stops actually present.

Edge ML has the same problem. We built the infrastructure for complex cases (language models, multimodal reasoning, generative AI), and now we're using it for everything. Sensor classification? Deploy a neural network. Anomaly detection? Fine-tune a transformer. Predictive maintenance? Surely this needs deep learning.

A quantized Llama 3B takes 2GB on disk and 4GB in memory. A 4-bit quantized 7B model still needs roughly 4GB. Want to run a 70B model? Even with aggressive quantization, you're looking at 35GB minimum.

A scikit-learn random forest for the same classification task takes 50KB.

The industry spent three years figuring out how to squeeze the truck into tighter parking spaces. Most deliveries never needed the truck.
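The quantized sizes above come from simple arithmetic: parameter count times bits per weight. Here is a rough sketch of that calculation. The function name is mine, and it counts weights only; real memory use also includes activations, KV cache, and runtime overhead, so treat these as lower bounds.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


# Weights only: actual memory use at inference time will be higher.
for name, params in [("3B", 3e9), ("7B", 7e9), ("70B", 70e9)]:
    print(
        f"{name}: {weight_footprint_gb(params, 4):.1f} GB at 4-bit, "
        f"{weight_footprint_gb(params, 16):.1f} GB at 16-bit"
    )
```

A 70B model at 4 bits per weight is 35GB before it has processed a single token. Compare that to a tree ensemble measured in kilobytes.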

Two Mistakes, Not One

The size obsession hides two distinct problems. First: teams often reach for the wrong vehicle entirely. Second: even with the right vehicle, the route planning determines whether packages arrive.

Most edge deployments handle predictive maintenance, anomaly detection, sensor classification, and quality control. These are tabular data problems. A NeurIPS 2022 paper confirmed what practitioners already suspected: tree-based models such as XGBoost and Random Forests outperform deep learning on tabular data across 45 benchmark datasets. A study on industrial IoT found that XGBoost achieved 96% accuracy in predicting factory equipment failures, reducing downtime by 45%. Random forests hit 98.5% on equipment failure classification.

TensorFlow Lite Micro fits in 16KB. TinyML gesture recognition runs at 138KB and 30 FPS. These aren't compromised models. They're right-sized for their problems. The e-bike, not the truck.

But here's what matters more: whether you're dispatching e-bikes or trucks, you need routes that work. And route planning is why 70% of Industry 4.0 AI projects stall in pilot.

The models work in demos. Deployment breaks them.

The Orchestration Gap

In the early days of Kubernetes, we made a version of this mistake. We thought container scheduling was the hard part. The hard part was everything after scheduling: networking, storage, observability, updates, rollbacks. The entire operational lifecycle.

Edge ML is learning this lesson now. Where MLOps ends with a packaged model, orchestration begins. And orchestration is where edge ML goes to die.

Think about what makes delivery logistics hard. It's not the vehicles. It's coordinating thousands of them across changing conditions. And when you're operating with vehicles/artifacts that are too big for the use case, you're just making everything harder.

Model staleness hits regardless of model size. Edge models, once deployed, might not be frequently updated. A classifier trained on 2024 patterns doesn't recognize 2025 anomalies. Rolling out updates across thousands of devices is nontrivial, whether you're pushing 50KB or 5GB. This is the equivalent of route maps that don't know about new neighborhoods. Your drivers show up, but they can't find the addresses.
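One common way to make fleet-wide updates less scary is a staged rollout: hash each device ID into a stable bucket and raise the percentage over time. The sketch below is illustrative only. The function names, the `salt` value, and the 0-99 bucket scheme are my assumptions, not a description of any particular product.

```python
import hashlib


def rollout_bucket(device_id: str, salt: str = "model-v2") -> int:
    """Map a device to a stable bucket in 0..99 (same inputs, same bucket)."""
    digest = hashlib.sha256(f"{salt}:{device_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def should_update(device_id: str, percent: int, salt: str = "model-v2") -> bool:
    """True if this device falls inside the current rollout percentage."""
    return rollout_bucket(device_id, salt) < percent


# Raising `percent` only ever adds devices; nobody already updated drops out.
fleet = [f"device-{i}" for i in range(1000)]
canary = [d for d in fleet if should_update(d, 5)]
print(f"{len(canary)} of {len(fleet)} devices in the 5% canary")
```

Because the bucket depends only on the device ID and the salt, a device that was offline during the canary phase makes the same decision when it reconnects. Changing the salt per release reshuffles which devices go first.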

Fleet heterogeneity compounds everything. Devices don't update uniformly. You end up managing fragmented fleets in which different nodes run different model versions with varying capabilities. Cloud deployments update in minutes. Edge deployments take weeks, sometimes months. Some devices never update at all. Imagine dispatching trucks, vans, and bikes from the same warehouse without a unified system that tracks which vehicle has which capabilities. Version skew creates subtle bugs that only manifest at scale.
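You can't manage version skew you can't see. As a minimal sketch, assume each device reports the model version it is running into a simple mapping (the `fleet` dict and the version strings here are made up for illustration):

```python
from collections import Counter
from typing import Dict, List, Tuple


def version_skew(fleet: Dict[str, str], target: str) -> Tuple[Counter, List[str]]:
    """Count devices per model version and list the ones not on the target."""
    counts = Counter(fleet.values())
    stale = sorted(device for device, version in fleet.items() if version != target)
    return counts, stale


# Hypothetical fleet report: device ID -> model version last reported.
fleet = {"press-01": "1.2", "press-02": "1.1", "oven-07": "1.2", "oven-09": "1.0"}
counts, stale = version_skew(fleet, target="1.2")
print(dict(counts))
print("not on target:", stale)
```

In a real fleet the report itself would be stale for offline devices, so a last-seen timestamp per device would matter as much as the version.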

Energy constraints create hard limits that benchmarks ignore. Thermal throttling kicks in when you stress mobile CPUs. Even a small model running continuous inference drains batteries and generates heat. Academic papers report Joules per prediction. Users report their phone dying by 2pm. This is the diesel cost that accumulates invisibly on every route, whether you're carrying one envelope or a hundred boxes.

Network variability breaks every cloud-native assumption. Traditional MLOps assumes stable, high-bandwidth connections. That assumption doesn't hold when inference pipelines need to survive outages, intermittent connectivity, or bandwidth that costs real money. What happens when your edge device goes offline for a week, then reconnects with stale models and queued data? It's like planning routes that assume every road is always open. The moment a bridge closes, your whole system breaks.
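The usual answer to "the bridge is closed" is store-and-forward: keep records locally, bounded so the device doesn't fill up, and drain them in order when the link comes back. A minimal sketch follows. The class name and the `send` callback are hypothetical, and a real device would persist the queue to disk rather than hold it in memory.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Bounded in-memory buffer that retries delivery in arrival order."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 1000):
        self._send = send  # returns True on success, False if the link is down
        # With maxlen set, appending to a full deque silently drops the oldest item.
        self._queue: Deque[dict] = deque(maxlen=max_items)

    def submit(self, record: dict) -> None:
        self._queue.append(record)
        self.flush()

    def flush(self) -> int:
        """Send queued records until one fails; return how many were sent."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # still offline: keep the record and try again later
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)
```

Dropping the oldest records when full is a policy choice, not a law. For anomaly data you might prefer to drop the most routine records first.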

The Data Pipeline Problem

This is where "feels free" really isn't free. Edge ML isn't failing because models are too big. It's failing because data pipelines weren't designed for bidirectional flow.

Delivery networks learned this decades ago. Packages flow out from warehouses, but returns flow back. Damage reports flow back. Delivery confirmations flow back. Reverse logistics are just as important as forward logistics, and often harder.

The traditional ML assumption:

Edge Device → Cloud → Inference → Response

What edge ML actually needs:

Edge Device ↔ Local Inference ↔ Selective Sync ↔ Model Updates ↔ Back to Edge

This bidirectionality creates problems most teams don't anticipate. As IBM's edge deployment guide notes: "In an edge deployment scenario, there is no direct reason to send production data to the cloud. This may create the issue that you'll never receive it, and you can check the accuracy of your training data. Generally, your training data will not grow."

Your model improves based on the data it sees. If that data never leaves the edge, your model never improves. But if all data goes to the cloud, you've rebuilt the centralized architecture you were trying to escape, with extra latency and bandwidth costs. It's like routing every return through your main distribution center instead of handling them at local hubs. Technically correct, but operationally a nightmare.

Edge-to-cloud ETL pipelines are emerging as critical infrastructure. They need real-time ingestion, adaptive transformation, graceful degradation when connectivity fails, and respect for data sovereignty constraints. A 50KB model and a 5GB model face identical challenges here. The pipeline doesn't care about parameter count, just like the route doesn't care whether you're driving a truck or riding a bike.

What Actually Works

The teams succeeding with edge ML have stopped optimizing vehicles and started optimizing routes.

Tiered inference separates quick decisions from complex reasoning. Vector search at the edge runs in 5-10ms using in-memory indexes. No GPU required. Simple classifications and caching happen locally. Complex reasoning routes selectively when network allows. This is the e-bike for last-mile delivery, the truck for bulk warehouse transfers. Match the vehicle to the delivery, not the other way around.
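In code, tiered inference is mostly a routing decision. This sketch assumes a local model that returns a label with a confidence score, a remote model behind a network call, and a connectivity check. All three callables and the 0.8 threshold are placeholders I made up for illustration.

```python
from typing import Any, Callable, Tuple


def tiered_predict(
    features: Any,
    local_model: Callable[[Any], Tuple[str, float]],
    remote_model: Callable[[Any], str],
    network_up: Callable[[], bool],
    min_confidence: float = 0.8,
) -> Tuple[str, str]:
    """Return (label, tier). Prefer the local answer; escalate only when unsure."""
    label, confidence = local_model(features)
    if confidence >= min_confidence:
        return label, "local"
    if network_up():
        try:
            return remote_model(features), "remote"
        except OSError:
            pass  # the link dropped mid-request; fall through to the local answer
    return label, "local-fallback"
```

The important property is the last line: when the network is gone, the device still answers. A low-confidence local answer, flagged as such, usually beats no answer.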

Edge MLOps mirrors replicate minimal cloud capabilities locally. When the network disappears, edge nodes still manage model lifecycle, handle updates from local cache, and queue telemetry for later sync. This approach acknowledges what cloud-native architectures ignore: networks fail. Devices go offline. The question isn't whether your deployment loses connectivity. It's whether it keeps working when it does. Local dispatch centers that function when headquarters goes dark.

Data locality as first principle means processing where data lives, not where servers are convenient. By 2025, over 50% of enterprise data will be processed at the edge, up from 10% in 2021. This shift is already happening in manufacturing, retail, healthcare, and logistics. Organizations adapting successfully treat edge deployment as first-class infrastructure, building intelligent data orchestration that moves compute to data rather than data to compute. Deliver from the nearest warehouse, not the central hub.

Selective synchronization solves the training data problem. Not all edge data needs to reach the cloud, but representative samples do. Anomalies do. Edge cases that challenged local models do. Smart filtering at the edge, with policies that adapt based on model confidence and data novelty, keeps training pipelines fed without overwhelming bandwidth or centralized storage. Send back the damage reports. Don't send back confirmation that every package arrived fine.
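A selective sync policy can be surprisingly small. The sketch below assumes each prediction carries a confidence, an anomaly flag, and some novelty score between 0 and 1. How you compute novelty is its own problem, and the field names and thresholds here are illustrative assumptions rather than recommendations.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    confidence: float  # model's confidence in its own output, 0..1
    is_anomaly: bool   # flagged by the local model or a rule
    novelty: float     # hypothetical 0..1 score: how unlike the training data


def sync_reason(
    p: Prediction,
    rng: random.Random,
    min_confidence: float = 0.7,
    max_novelty: float = 0.5,
    sample_rate: float = 0.01,
) -> Optional[str]:
    """Return why this record should be uploaded, or None to keep it local."""
    if p.is_anomaly:
        return "anomaly"
    if p.confidence < min_confidence:
        return "low_confidence"
    if p.novelty > max_novelty:
        return "novel"
    if rng.random() < sample_rate:
        return "sample"  # small random slice keeps the training set representative
    return None
```

Returning the reason rather than a bare boolean means the cloud side knows why each record arrived, which helps when you later tune the thresholds.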

This is exactly why we built Expanso around data orchestration rather than model serving. The model isn't the bottleneck, whether it's a 50KB decision tree or a 4GB quantized LLM. The bottleneck is getting the right data to the right place at the right time, coordinating updates across heterogeneous fleets, and maintaining observability when half your nodes are intermittently connected. Our approach treats edge nodes as first-class participants in data pipelines, not afterthoughts bolted onto cloud architectures. Route planning, not vehicle engineering.

Where This Is Heading

$378 billion in projected edge computing spending by 2028. IDC expects edge AI deployments to grow at 35% CAGR over the next three years. That investment isn't going into building better trucks. The quantization problem is largely solved. The money is going into the logistics layer that makes edge deployment actually work.

Federated learning is moving from research curiosity to production requirement. It's the only practical way to improve models from edge data without centralizing that data, solving the training feedback loop that IBM's guide warned about. Standardized edge-cloud orchestration protocols are emerging to simplify deployment across heterogeneous environments. The security surface is expanding dramatically as AI distributes across thousands of devices rather than sitting in secured data centers.

The companies navigating this successfully aren't the ones with the smallest vehicles or the fastest engines. They're the ones who recognized early that vehicle optimization was table stakes, not competitive advantage. The hard problems were always about fleet management, route planning, package tracking, and graceful degradation when conditions change.

The Right Questions

Not "how do I compress my neural network to fit on edge hardware?"

Start with "what's the simplest model that solves my actual problem?" For sensor data, that's often a decision tree. Kilobytes, not gigabytes. Proven to outperform neural networks on tabular data. For language tasks, yes, you need transformers. Adobe's SlimLM shows what's possible: 125M-1B parameters, document assistance on smartphones.

Then ask "can my infrastructure actually deploy and maintain this?" Can you push updates to a fragmented fleet? Can your edge nodes operate when disconnected? Does your data pipeline support bidirectional flow? Can you monitor inference quality across thousands of distributed nodes?

The size obsession missed the point twice: once by reaching for complex models when simple ones work better, and again by focusing on compression when deployment was the actual bottleneck.

UPS isn't going to start delivering envelopes on e-bikes anytime soon. The truck infrastructure exists. The routes are planned. The drivers are trained. Switching has costs.

But if you're building edge ML from scratch, you get to choose. You can build the truck fleet because trucks are what serious logistics companies use. Or you can look at what you're actually delivering and pick the right vehicle for the job.

A 50KB model that deploys beats a 50MB model that doesn't. But even an e-bike needs a route that works.

The edge isn't where ML projects go to die. It's where the logistics need to grow up.

Want to learn how intelligent data pipelines can reduce your AI costs? Check out Expanso. Or don't. Who am I to tell you what to do.

NOTE: I'm currently writing a book based on what I have seen of the real-world challenges of data preparation for machine learning, focusing on operations, compliance, and cost. I'd love to hear your thoughts!