The Fairwater Paradox: Microsoft Built a Monster That Needs 900TB/Second of USEFUL Data

Microsoft's Fairwater AI datacenter needs 900TB/second of data, facing challenges in efficiency, storage, and data quality management

OK, let's talk about Microsoft's new Fairwater "AI factory." (The quotes here are doing a lot of work… do we REALLY need a new name for this? It's so dumb.) They're calling it the world's most powerful AI datacenter. Cool. Millions of GPUs. Liquid cooling. Storage stretching five football fields.

Here's what they're NOT telling you: the math on utilization is going to be BRUTAL.

If these chips ran at full capacity, they'd need to consume the equivalent of the entire internet's daily data volume in well under an hour. And if even 20% of that data is noise? Congratulations, you just set $100 million on fire.

The Bandwidth Monster Nobody Wants to Talk About

I've been lucky enough to be around super high scale infrastructure at Google, AWS, and Microsoft. I have seen what these systems need. And I'm telling you: Microsoft's announcement is missing the most important part of the story.

Let me show you the math they conveniently left out.

NVIDIA's GB200 chips - the beating heart of Fairwater - have approximately 8TB/s of memory bandwidth each. That's:

- Bandwidth per chip = 8 × 10¹² bytes/second

Microsoft says they have "millions" of these processors. Let's be EXTREMELY conservative and say just 100,000 GPUs are actually running at any given time (probably 10% of what's really there):

- Aggregate bandwidth = 100,000 × 8TB/s = 800 PB/s

But here's the thing about GPUs: they're never at 100% utilization. Why? Because we can't feed them fast enough. Industry standard for well-optimized training? About 30-40% MFU (Model FLOP Utilization). Let's be generous and say 50%:

- Required data throughput = 800 PB/s × 0.5 = 400 PB/s

For context, the entire internet generates about 400 exabytes daily. That's: Internet data rate = 400 EB/day = 400,000 PB/day = 4.6 PB/s

Do you see the problem? Fairwater needs 87× the entire internet's data generation rate.
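If you want to check that arithmetic yourself, here is a minimal Python sketch. It assumes decimal units (1 PB = 10¹⁵ bytes) and simply reuses the figures above. The variable names are mine, not anything from Microsoft or NVIDIA.

```python
# Back-of-the-envelope check of the bandwidth figures (decimal units throughout).
TB = 10**12
PB = 10**15
EB = 10**18
SECONDS_PER_DAY = 86_400

bandwidth_per_gpu = 8 * TB   # ~8 TB/s memory bandwidth per GPU (the article's figure)
active_gpus = 100_000        # deliberately conservative assumption
utilization = 0.5            # generous utilization assumption

aggregate_bw = active_gpus * bandwidth_per_gpu     # bytes/second
required_throughput = aggregate_bw * utilization   # bytes/second
internet_rate = 400 * EB / SECONDS_PER_DAY         # bytes/second

print(f"Aggregate bandwidth: {aggregate_bw / PB:.0f} PB/s")
print(f"Required throughput: {required_throughput / PB:.0f} PB/s")
print(f"Internet data rate:  {internet_rate / PB:.2f} PB/s")
print(f"Multiple of internet: {required_throughput / internet_rate:.1f}x")
```

Without rounding the internet rate to 4.6 PB/s first, the multiple comes out a little over 86, so treat "87×" as "roughly 86 to 87×". Either way, the conclusion doesn't change.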

"But David, They Won't Run at Full Capacity!"

Fair point! Let's talk about realistic utilization.

Microsoft isn't running one giant training job. They're serving hundreds of customers. Think of it like a hotel – you never have 100% occupancy, but you need enough capacity for peak times.

Industry reports suggest hyperscaler GPU utilization averages:

- Training clusters: 40-60% time-utilized

- Within that time: 30-40% actual compute utilization

- Effective utilization: 12-24%

So let's recalculate with realistic multi-tenant scenarios:

Realistic throughput = 800 PB/s × 0.6 (time util) × 0.35 (compute util) = 168 PB/s

Better? Sure. Still completely insane? Absolutely.

That's still 36× the entire internet's data rate. And remember – this is for 100,000 GPUs. Microsoft has MILLIONS.
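Here is the same multi-tenant calculation as a short Python sketch, in case you want to plug in your own utilization guesses. The ranges are the ones quoted above; picking 0.6 and 0.35 for the "realistic" case mirrors the text and is an assumption, not a measurement.

```python
PB = 10**15
EB = 10**18

peak_bw = 800 * PB                  # aggregate figure for 100,000 GPUs, from the text
time_util_range = (0.40, 0.60)      # share of time a training cluster is busy
compute_util_range = (0.30, 0.40)   # compute utilization while it is busy

# Effective utilization is the product of the two factors.
effective_low = time_util_range[0] * compute_util_range[0]
effective_high = time_util_range[1] * compute_util_range[1]

# The scenario used in the text: 60% time-utilized, 35% compute-utilized.
realistic_throughput = peak_bw * 0.6 * 0.35
internet_rate = 400 * EB / 86_400   # bytes/second

print(f"Effective utilization: {effective_low:.0%} to {effective_high:.0%}")
print(f"Realistic throughput:  {realistic_throughput / PB:.0f} PB/s")
print(f"Multiple of internet:  {realistic_throughput / internet_rate:.0f}x")
```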

The Storage Systems Are a Bottleneck, Not a Solution

Microsoft proudly announced that each Azure Blob Storage account can handle 2 million transactions per second.

Let's be generous and assume 1MB per transaction:

Storage bandwidth per account = 2M × 1MB = 2TB/s

To feed our realistic scenario:

Required storage accounts = 168 PB/s ÷ 2TB/s = 84,000 accounts

And they need to be perfectly coordinated. Zero latency. No hot spots. No network congestion.

Have you ever tried to coordinate 84,000 anything? I helped launch Google Kubernetes Engine. Coordinating 1,000 nodes was hard. 84,000 storage accounts? That's not engineering. That's prayer.
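The account count is very sensitive to the 1MB-per-transaction guess, so here is a small sketch that lets you vary it. The 2 million transactions per second is the figure quoted above. The `accounts_needed` helper and the alternative object size are purely illustrative assumptions.

```python
import math

MB = 10**6
PB = 10**15

TRANSACTIONS_PER_SECOND = 2_000_000   # per storage account, as quoted above
REQUIRED_THROUGHPUT = 168 * PB        # bytes/second, the realistic scenario


def accounts_needed(bytes_per_transaction: int) -> int:
    """Storage accounts required if every transaction moves this many bytes."""
    per_account_bw = TRANSACTIONS_PER_SECOND * bytes_per_transaction
    return math.ceil(REQUIRED_THROUGHPUT / per_account_bw)


for size_mb in (1, 4):   # 1 MB is the text's assumption; 4 MB is a hypothetical
    print(f"{size_mb} MB per transaction -> {accounts_needed(size_mb * MB):,} accounts")
```

Even if you quadruple the object size, you are still coordinating tens of thousands of accounts.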

The 20% Waste Catastrophe (Or: How to Burn a Billion Dollars)

Here's where it gets REALLY expensive.

Training GPT-4 reportedly cost $100 million. But that assumes relatively clean data. What happens in the real world?

Industry data quality breakdowns:

- 5-10% exact duplicates

- 10-15% near-duplicates (same content, different format)

- 5-10% mislabeled or corrupted

- 5-10% non-compliant (copyright, PII, etc.)

- 10-20% low-quality or irrelevant

Let's be conservative: 20% problematic data.

You might think: "OK, 20% waste = $20M lost on a $100M run."

WRONG.

Bad data compounds:

- Direct waste: 20% of compute = $20M

- Overfitting from duplicates: Requires 1.5× the epochs = +$50M

- Model degradation: Retraining after discovering issues = +$100M

- Legal exposure: Ask Anthropic about their $1.5B settlement

Real cost of 20% bad data:

Total cost = $100M × 2.7 = $270M

At Fairwater scale with multiple customers?

Annual waste = $270M × 20 customers × 4 runs/year = $21.6 BILLION

That's not a rounding error. That's in the ballpark of Iceland's entire GDP.
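For anyone who wants to swap in their own numbers, the compounding above can be sketched in a few lines of Python. Every input is one of the estimates from this section (the 20 customers and 4 runs per year included). Legal exposure is left out, exactly as in the 2.7× figure.

```python
BASE_RUN_COST = 100_000_000   # reported cost of one large training run, in dollars
BAD_DATA_FRACTION = 0.20      # conservative share of problematic data
EPOCH_MULTIPLIER = 1.5        # epochs needed once duplicates cause overfitting

direct_waste = BASE_RUN_COST * BAD_DATA_FRACTION          # compute spent on bad data
extra_epochs = BASE_RUN_COST * (EPOCH_MULTIPLIER - 1.0)   # additional epochs
retraining = BASE_RUN_COST                                # one full retrain

total_cost = BASE_RUN_COST + direct_waste + extra_epochs + retraining
multiplier = total_cost / BASE_RUN_COST

customers = 20       # assumption from the text
runs_per_year = 4    # assumption from the text
annual_cost = total_cost * customers * runs_per_year

print(f"Cost of one run with 20% bad data: ${total_cost / 1e6:.0f}M ({multiplier:.1f}x)")
print(f"Annual cost at that scale:         ${annual_cost / 1e9:.1f}B")
```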

Why Every Percentage Point Is Now Existential

Let me put this a different way:

Cost of 1% inefficiency at Fairwater scale:

- 1% of 168 PB/s = 1.68 PB/s wasted

- 1.68 PB/s × 86,400 seconds/day = 145 EB/day wasted

- At AWS transfer pricing of $0.02/GB:

- Daily transfer waste = 145 billion GB × $0.02 = $2.9 billion

- Add compute at $500/GPU-hour:

- Daily compute waste = 100,000 GPUs × 24 hours × $500 × 1% = $12M

- Annual waste from 1% inefficiency = ($2.9B + $12M) × 365 ≈ $1.06 TRILLION

Scale that to 20% inefficiency? Roughly $21 trillion annually. That dwarfs the entire AI investment of 2024.
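The data-volume and compute lines above are easy to reproduce. This sketch assumes the same 100,000 active GPUs used throughout and the $500/GPU-hour figure from the list. It only covers wasted volume and wasted compute, not transfer pricing.

```python
PB = 10**15
EB = 10**18
SECONDS_PER_DAY = 86_400

realistic_throughput = 168 * PB   # bytes/second, from the multi-tenant scenario
inefficiency = 0.01               # 1% of the work is wasted

wasted_rate = realistic_throughput * inefficiency    # bytes/second
wasted_per_day = wasted_rate * SECONDS_PER_DAY       # bytes/day

active_gpus = 100_000             # same conservative assumption as before
price_per_gpu_hour = 500          # dollars, the figure used in the list above
daily_compute_waste = active_gpus * 24 * price_per_gpu_hour * inefficiency

print(f"Wasted throughput:   {wasted_rate / PB:.2f} PB/s")
print(f"Wasted data per day: {wasted_per_day / EB:.0f} EB")
print(f"Daily compute waste: ${daily_compute_waste / 1e6:.0f}M")
```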

The Physical Impossibility Nobody Wants to Admit

Let's zoom out to Earth-scale constraints.

Global data generation (per IDC and Seagate):

- Total: ~400 EB/day

- Video streaming: 60%

- Backups/replicas: 15%

- Encrypted personal: 10%

- Business data: 10%

- Useful for AI: ~5% = 20 EB/day

Fairwater's consumption rate for useful data:

Time to exhaust Earth's daily AI data = 20 EB ÷ 168 PB/s = 119 seconds

That’s right … two minutes.

One Fairwater facility could consume humanity's entire daily useful data production in the time it takes to make amazing instant ramen.

And, of course, Microsoft is building multiple identical facilities, but we're not producing useful data any faster.
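The two-minute figure falls straight out of the breakdown above. Here is a quick sketch, using integer percentages so the shares add up to exactly 100. The category labels are just the ones listed in this section.

```python
PB = 10**15
EB = 10**18

daily_total = 400 * EB   # bytes generated per day, per the estimate above

# Share of daily data by category, in percent.
shares = {
    "video streaming": 60,
    "backups/replicas": 15,
    "encrypted personal": 10,
    "business data": 10,
    "useful for AI": 5,
}
assert sum(shares.values()) == 100

useful_bytes = daily_total * shares["useful for AI"] // 100
consumption_rate = 168 * PB   # bytes/second, the realistic scenario

seconds_to_exhaust = useful_bytes / consumption_rate
print(f"Useful data per day: {useful_bytes / EB:.0f} EB")
print(f"Time to consume it:  {seconds_to_exhaust:.0f} seconds")
```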

The Intelligent Pipeline Revolution (Or: How to Not Go Bankrupt)

You can't engineer your way out of physics. Microsoft solved the compute problem with brute force:

- Liquid cooling systems

- Federal land grants for power

- Billions in infrastructure

But data doesn't work like that. You can't make photons go faster. You can't compress incompressible information. You can't violate causality (sorry, quantum computing isn't helping here).

The ONLY solution is intelligence at the pipeline level.

The Math of Smart vs. Dumb Pipelines

Dumb Pipeline (this is the default):

- Collect everything: 100TB raw data

- Transfer cost: 100TB × $0.02/GB = $2,000

- Storage cost: 100TB × $0.023/GB-month = $2,300

- Compute cost: 200 GPU-hours × $500 = $100,000

- Discover 70% was garbage

- Retrain with clean data: +$70,000

- Total: $174,300 for 30TB useful output

- Cost per useful TB: $5,810

Intelligent Pipeline (What We Probably Should All Do):

- Pre-filter at source: 100TB → 40TB relevant

- Deduplicate: 40TB → 30TB unique

- Validate compliance: Remove 5TB risky data → 25TB

- Compress: 25TB → 20TB transferred

- Transfer cost: 20TB × $0.02/GB = $400

- Compute cost: 40 GPU-hours × $500 = $20,000

- Total: $20,400 for 25TB useful output

- Cost per useful TB: $816

Efficiency gain = 1 − ($816 ÷ $5,810) ≈ 86% lower cost per useful TB

At Fairwater scale, that's the difference between profit and bankruptcy.
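The comparison above fits in a few lines of Python if you want to try your own prices. All rates are the illustrative ones from the two lists. The intelligent-pipeline list has no storage line, so none is included here.

```python
GB_PER_TB = 1_000
TRANSFER_PER_GB = 0.02        # dollars per GB transferred
STORAGE_PER_GB_MONTH = 0.023  # dollars per GB-month stored
GPU_HOUR = 500                # dollars per GPU-hour

# Dumb pipeline: move and store everything, train, then retrain on clean data.
dumb_total = (
    100 * GB_PER_TB * TRANSFER_PER_GB
    + 100 * GB_PER_TB * STORAGE_PER_GB_MONTH
    + 200 * GPU_HOUR
    + 70_000                  # retraining after discovering the garbage
)
dumb_per_tb = dumb_total / 30     # 30 TB of useful output

# Intelligent pipeline: filter, dedupe, validate, compress, then move 20 TB.
smart_total = 20 * GB_PER_TB * TRANSFER_PER_GB + 40 * GPU_HOUR
smart_per_tb = smart_total / 25   # 25 TB of useful output

gain = 1 - smart_per_tb / dumb_per_tb

print(f"Dumb pipeline:  ${dumb_total:,.0f} total, ${dumb_per_tb:,.0f} per useful TB")
print(f"Smart pipeline: ${smart_total:,.0f} total, ${smart_per_tb:,.0f} per useful TB")
print(f"Cost per useful TB drops by {gain:.0%}")
```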

Where Could I Get Such an Intelligent Data Pipeline??

OK, I know I’m highly biased here (full disclosure: I'm CEO of Expanso). I work for a company that builds platforms for intelligent data pipelines. So yeah, I have a horse in this race.

But don't believe me. Do the math yourself.

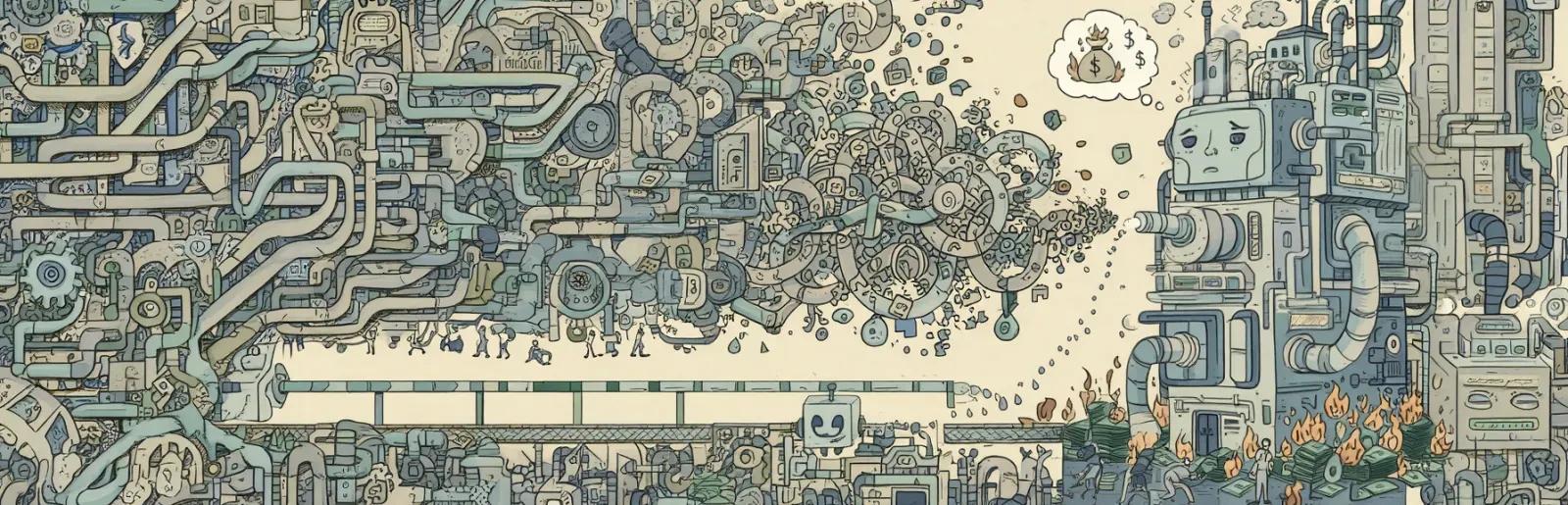

Microsoft built the world's most powerful digestive system. Beautiful engineering. Truly impressive. But without the data in the right size, shape, and location, you’ve got a race car with no gas.

Intelligent data pipelines are the missing piece. The preprocessing layer that:

- Filters out the 60% that's garbage

- Deduplicates the 15% that's redundant

- Validates compliance BEFORE you get sued

- Compresses intelligently (not everything benefits from gzip, people)

- Scores quality so you process the good stuff first
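To make those steps concrete, here is a toy sketch of such a preprocessing layer using only the Python standard library. It is not how any particular product works. The email regex, the distinct-word quality score, and the 0.5 threshold are all hypothetical stand-ins for real compliance and quality checks, and the dedup only catches copies that differ in case or whitespace.

```python
import hashlib
import re
import zlib

# Toy stand-in for a real PII/compliance check (assumption, not a real policy).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def normalize(text: str) -> str:
    """Collapse case and whitespace so trivially reformatted copies hash the same."""
    return " ".join(text.lower().split())


def quality_score(text: str) -> float:
    """Toy heuristic: share of distinct words in the record."""
    words = text.split()
    return len(set(words)) / len(words) if words else 0.0


def maybe_compress(payload: bytes) -> tuple[bytes, bool]:
    """Compress only when it actually saves bytes; the flag says which form we kept."""
    packed = zlib.compress(payload)
    if len(packed) < len(payload):
        return packed, True
    return payload, False


def run_pipeline(records, min_score=0.5):
    """Filter, deduplicate, check compliance, score, then emit best records first."""
    seen = set()
    kept = []
    for text in records:
        norm = normalize(text)
        if not norm:
            continue                      # filter: empty record
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue                      # deduplicate
        seen.add(digest)
        if EMAIL_RE.search(text):
            continue                      # compliance: drop records that look like PII
        score = quality_score(norm)
        if score < min_score:
            continue                      # filter: low quality
        kept.append((score, text))
    kept.sort(key=lambda pair: pair[0], reverse=True)   # good stuff first
    return [(score, *maybe_compress(text.encode("utf-8"))) for score, text in kept]
```

Calling `run_pipeline` on a list of strings returns `(score, payload, is_compressed)` tuples. The design point is the ordering: the cheap checks run before anything is compressed or shipped, so bytes that will be thrown away never cost transfer or GPU time.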

This isn't about replacing Fairwater. It's about making it economically viable.

Because here's the truth: without data, Fairwater is a $10 billion paperweight.

The Future Is Filtered (Or There Is No Future)

Microsoft showed us what's physically possible. Millions of GPUs. Exabytes of storage. Liquid cooling that could chill a small city.

But physics doesn't care about your engineering prowess. The speed of light is non-negotiable. Information theory is a harsh mistress. And data, unlike compute, can't be created from nothing.

The next phase of AI isn't JUST about bigger factories. It's about pairing them with smarter plumbing.

Otherwise, we just built the world's most expensive space heater.

Want to learn how intelligent data pipelines can reduce your AI costs? Check out Expanso. Or don't. But definitely do the math yourself. Please. For the love of all that is holy, do the math.

NOTE: I'm currently writing a book based on what I have seen about the real-world challenges of data preparation for machine learning, focusing on operational, compliance, and cost. I'd love to hear your thoughts!