The Shift Left Nobody's Talking About: When Your Data Stack Becomes the Next X-Acto Knife

Data’s 1971 Moment

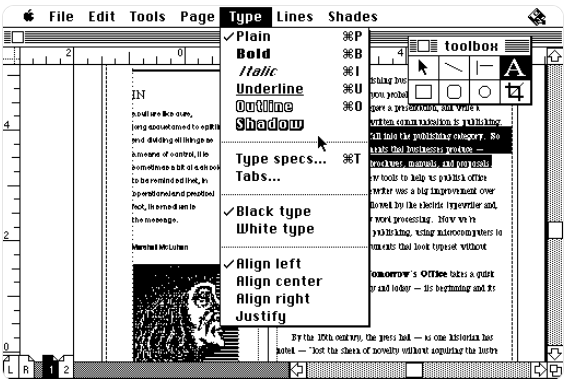

In 1971, knowing how to assemble a magazine spread with an X-Acto knife was a career-defining skill. Paste-up artists spent years mastering the geometry, the wax, the precise cuts. Then desktop publishing arrived.

In 1985, Aldus PageMaker shipped on a floppy disk for the Mac, and the decades-old craft was on its way to becoming an artifact.

Five years ago, assembling a production-ready UI in a week was considered efficient. Today, your PM can generate something functional in an afternoon with AI-driven design tools. Same polish? Not always. But good enough to test with users and out-iterate competitors.

Every technical revolution sorts people into two camps: those who recognize the shift and move forward, and those who defend the old gates. Right now, data infrastructure is having its 1971 moment. Most people just haven’t noticed.

The Pattern: Everything Shifts Left

Software development is a story of capabilities being pulled leftward in the lifecycle.

- Testing shifted left from QA teams to developers writing tests as they code.

- Security shifted left via DevSecOps, embedding checks directly into CI/CD.

- Operations shifted left through DevOps and SRE, giving developers deployment and monitoring power.

Every shift triggered the same objections: “Quality will tank.” “We need specialists.” “We’ll lose control.” And every time, the economics steamrolled the resistance. Faster teams won.

AI is the ultimate shift-left accelerant. PMs and designers generate working code. Operators query systems in plain language. Front-line workers access analyses that used to require analysts.

But here’s the absurd part: while everything else shifts left, data infrastructure remains stubbornly centralized. Developers can spin up a Kubernetes cluster in minutes, but moving data still requires tickets, ETL approval chains, and pipeline backlogs.

One of the Last Holdouts: Centralized Data Infrastructure

For a decade, data warehouse vendors sold a simple pitch: “Send everything to us, and we’ll make sense of it.” For a while, it worked. Bandwidth was costly. Compute was centralized. Machine learning lived in research labs.

That world is dead. Three forces killed it:

1. Distributed compute became real

Forget the IoT hype cycle. If two machines share an Ethernet cable, you have an “edge.” And it’s running meaningful work: model inference, sensor processing, operational decision-making.

Ships. Satellites. Factory floors. Retail stores. Vehicles. These aren’t toy deployments. They’re production systems. Waiting to ship raw data back to a warehouse isn’t just slow; often it’s impossible.

2. Bandwidth budgets don’t scale with data volume

A 4K camera recording around the clock with H.265 at 4 Mbps generates:

- 43.2 GB/day

- ~1.3 TB/month

Per camera.

Multiply by thousands of locations, plus logs, telemetry, and app data. The economics collapse. You can’t “just centralize it.” You’d burn your budget in transit fees before the data even lands.
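The per-camera figures are easy to check. This sketch assumes a constant 4 Mbps bitrate, decimal units (1 GB = 10^9 bytes), and a 30-day month; the 2,000-camera fleet is a hypothetical size chosen only to make “thousands of locations” concrete.

```python
# Back-of-the-envelope bandwidth math for continuously recording cameras.
# Assumptions: constant bitrate, decimal units (1 GB = 10**9 bytes), 30-day month.

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def bytes_per_day(bitrate_mbps: float) -> float:
    """Bytes produced per day by one stream at a constant bitrate (megabits/s)."""
    bits_per_second = bitrate_mbps * 1_000_000
    return bits_per_second / 8 * SECONDS_PER_DAY


def terabytes_per_month(bitrate_mbps: float, cameras: int = 1, days: int = 30) -> float:
    """Terabytes per month for a fleet of identical cameras."""
    return bytes_per_day(bitrate_mbps) * days * cameras / 1e12


print(f"{bytes_per_day(4) / 1e9:.1f} GB/day per camera")    # 43.2
print(f"{terabytes_per_month(4):.2f} TB/month per camera")  # 1.30
# Hypothetical fleet size, for illustration only.
print(f"{terabytes_per_month(4, cameras=2_000):,.0f} TB/month for 2,000 cameras")
```

At that hypothetical scale, video alone comes to roughly 2,592 TB (about 2.6 PB) per month, before any logs, telemetry, or app data.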

3. Regulations make centralization legally dangerous

GDPR fines can reach €20M or 4% of worldwide annual turnover, whichever is higher.

Data sovereignty laws proliferate.

Local processing isn’t an optimization. It’s compliance.
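The GDPR “whichever is higher” rule is simply a maximum over two terms. A minimal sketch of the upper-tier ceiling under Article 83(5), assuming turnover is given in whole euros; the function name is illustrative, and the result is a legal maximum, not an estimate of what a regulator would actually impose.

```python
# Upper-tier GDPR ceiling (Article 83(5)): the higher of EUR 20 million or 4% of
# total worldwide annual turnover for the preceding financial year.
# This returns the maximum possible fine, not a likely one.

FLAT_CAP_EUR = 20_000_000
TURNOVER_PERCENT = 4


def gdpr_fine_ceiling_eur(annual_turnover_eur: int) -> int:
    """Maximum upper-tier fine in whole euros (percentage term rounded down)."""
    percentage_term = annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FLAT_CAP_EUR, percentage_term)


# The flat cap dominates until turnover passes EUR 500 million (20M / 4%).
for turnover in (100_000_000, 500_000_000, 2_000_000_000):
    print(f"turnover EUR {turnover:,} -> ceiling EUR {gdpr_fine_ceiling_eur(turnover):,}")
```

For a company with €2 billion in turnover, the ceiling is €80 million, four times the flat cap.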

Meanwhile, 71% of enterprise data is never used, according to Gartner. It goes from generation to archive without a single meaningful interaction.

We built a system optimized for collecting, moving, and storing data—and not for understanding it. That system is failing.

The Resistance: Skills vs. Power

Two groups resist the shift left, for different reasons.

Skills-Based Resisters

These are the ETL specialists, pipeline magicians, and warehouse optimizers who built their careers on mastery of centralized systems. Now AI-assisted tools generate transformations in minutes. Yes, quality varies. So did early desktop publishing layouts. It didn’t matter.

Power-Based Resisters

More interesting—and more threatened.

If your authority comes from controlling the central data bottleneck, what happens when 80% of processing moves to the edge? You’re no longer the gatekeeper. You’re no longer the checkpoint. You're no longer the control point at all.

Resistance isn’t strategy. It’s denial. The shift is already underway.

What Must Actually Change

Processing must move to where data is generated. Not entirely—central warehouses are still essential—but the ratio must invert. Instead of 90% of ETL happening centrally, 80% needs to happen at the edge.

Local systems should handle:

- Schema validation

- Compression and filtering

- Enrichment

- Context stitching

- Bandwidth-aware routing

Central systems should receive clean, contextualized, purpose-built data.
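The local responsibilities listed above can be sketched as one small batch function. This is a hypothetical illustration, not Expanso’s or any other product’s API: the field names, the 0.5 deadband filter, the one-second send budget, and the `EdgeContext` type are all assumptions. Python’s standard `json` and `zlib` modules handle serialization and compression.

```python
import json
import zlib
from dataclasses import dataclass
from typing import Any, Optional

# Hypothetical edge-side pipeline covering the local responsibilities listed above.
# Field names, the deadband, and the send-time budget are illustrative assumptions.

REQUIRED_FIELDS: dict[str, type] = {"sensor_id": str, "ts": float, "value": float}


@dataclass(frozen=True)
class EdgeContext:
    site_id: str
    region: str
    uplink_kbps: int  # measured uplink capacity; assumed to be known on the node


def validate(record: dict[str, Any]) -> bool:
    """Schema validation: every required field is present with the expected type."""
    return all(isinstance(record.get(k), t) for k, t in REQUIRED_FIELDS.items())


def changed_enough(value: float, last: Optional[float], deadband: float) -> bool:
    """Filtering: skip readings within `deadband` of the last forwarded value."""
    return last is None or abs(value - last) >= deadband


def enrich(record: dict[str, Any], ctx: EdgeContext) -> dict[str, Any]:
    """Enrichment and context stitching: attach site metadata at the source."""
    return {**record, "site_id": ctx.site_id, "region": ctx.region}


def route(payload: bytes, ctx: EdgeContext, max_send_seconds: float = 1.0) -> str:
    """Bandwidth-aware routing: send now only if the batch fits the time budget."""
    if ctx.uplink_kbps <= 0:
        return "buffer_locally"  # link is down: hold data on the node
    send_seconds = len(payload) * 8 / (ctx.uplink_kbps * 1000)
    return "send_now" if send_seconds <= max_send_seconds else "buffer_locally"


def process_batch(records: list[dict[str, Any]], ctx: EdgeContext,
                  deadband: float = 0.5) -> tuple[bytes, str, int]:
    last_forwarded: dict[str, float] = {}
    kept: list[dict[str, Any]] = []
    for record in records:
        if not validate(record):
            continue  # a production system would quarantine these, not drop them
        sensor = record["sensor_id"]
        if not changed_enough(record["value"], last_forwarded.get(sensor), deadband):
            continue
        last_forwarded[sensor] = record["value"]
        kept.append(enrich(record, ctx))
    payload = zlib.compress(json.dumps(kept).encode("utf-8"))  # compression
    return payload, route(payload, ctx), len(kept)


readings = [
    {"sensor_id": "t1", "ts": 0.0, "value": 21.0},
    {"sensor_id": "t1", "ts": 1.0, "value": 21.1},   # within deadband: filtered
    {"sensor_id": "t1", "ts": 2.0, "value": 23.0},   # real change: kept
    {"sensor_id": "t1", "ts": 3.0, "value": "hot"},  # wrong type: fails validation
]
ctx = EdgeContext(site_id="store-042", region="region-a", uplink_kbps=512)
payload, decision, kept_count = process_batch(readings, ctx)
print(kept_count, decision)
```

The deadband filter is the simplest form of “forward only what changed”; real deployments might use sampling, rate limits, or anomaly scores instead. Routing on estimated send time, rather than a fixed link threshold, keeps large batches from monopolizing a weak uplink.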

Why It Matters for AI

Models trained without context are models trained on noise.

A temperature reading of “71°F at 4 a.m.” is meaningless without geography. On a summer night in Dubai, that’s unusually cold. In Atlanta, that’s warm. Strip context at the source and it can never be recovered.
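The loss of context can be made concrete with a small data-structure sketch. The field names and site identifiers are hypothetical; the coordinates are approximate city-center values. If only the raw reading leaves the sensor, two sites produce identical records and nothing downstream can tell them apart.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RawReading:
    """What the sensor itself knows: a number, a unit, and a local clock time."""
    value: float
    unit: str
    local_time: str


@dataclass(frozen=True)
class SiteContext:
    """What the edge node knows about where the sensor lives (hypothetical fields)."""
    site_id: str
    city: str
    latitude: float
    longitude: float


def enrich_at_source(reading: RawReading, site: SiteContext) -> dict:
    """Stitch site context onto the reading before it leaves the edge."""
    return {**asdict(reading), **asdict(site)}


# Two different sensors report exactly the same raw values.
dxb_reading = RawReading(value=71.0, unit="degF", local_time="04:00")
atl_reading = RawReading(value=71.0, unit="degF", local_time="04:00")
dubai = SiteContext("site-dxb-01", "Dubai", 25.2, 55.3)
atlanta = SiteContext("site-atl-01", "Atlanta", 33.7, -84.4)

enriched = [enrich_at_source(dxb_reading, dubai), enrich_at_source(atl_reading, atlanta)]
stripped = [asdict(dxb_reading), asdict(atl_reading)]  # what arrives if context is dropped

print(enriched[0] == enriched[1])  # False: the sites remain distinguishable
print(stripped[0] == stripped[1])  # True: no way to recover which site is which
```

Adding context at the edge costs a few bytes per record; reconstructing it centrally would need a join key that, in the stripped version, no longer exists.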

The next-generation architecture is simple:

Local processing, centralized policy.

Edge teams execute; central teams architect.
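One way to read “local processing, centralized policy” is that central teams publish rules as versioned data and every edge node executes them locally. The sketch assumes a hypothetical JSON policy with a redaction list and a list of regions allowed to forward data upstream; none of these names come from a specific product.

```python
import json
from dataclasses import dataclass
from typing import Any, Optional

# Authored and versioned by the central team, then distributed to every edge node.
POLICY_JSON = """
{
  "version": 3,
  "redact_fields": ["customer_email", "device_owner"],
  "export_regions": ["region-a", "region-b"]
}
"""


@dataclass(frozen=True)
class Policy:
    version: int
    redact_fields: frozenset[str]
    export_regions: frozenset[str]

    @classmethod
    def from_json(cls, text: str) -> "Policy":
        doc = json.loads(text)
        return cls(
            version=doc["version"],
            redact_fields=frozenset(doc["redact_fields"]),
            export_regions=frozenset(doc["export_regions"]),
        )


def apply_policy(record: dict[str, Any], policy: Policy,
                 node_region: str) -> Optional[dict[str, Any]]:
    """Executed on the edge node: decide locally what may leave, and in what form."""
    if node_region not in policy.export_regions:
        return None  # data stays on the node; only local processing applies
    out = {k: v for k, v in record.items() if k not in policy.redact_fields}
    out["policy_version"] = policy.version  # lets the central team audit which rules ran
    return out


policy = Policy.from_json(POLICY_JSON)
record = {"sensor_id": "t1", "value": 71.0, "customer_email": "a@example.com"}
print(apply_policy(record, policy, node_region="region-a"))
print(apply_policy(record, policy, node_region="region-c"))
```

In this arrangement the central team changes fleet-wide behavior by shipping a new policy version rather than editing pipelines, while edge teams own the execution and its correctness.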

We’re already seeing customers push 25,000 metrics/second through distributed pipelines, processing locally and forwarding only what matters. The bandwidth savings alone justify the shift. The latency improvements unlock new use cases. Data quality finally matches operational reality.
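Forwarding “only what matters” can take many forms. A common one, sketched here as an assumption rather than a description of any customer’s pipeline, is to collapse raw samples into per-window summaries and forward individual samples only when they cross an alert threshold. The metric names, window size, and threshold are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import fmean
from typing import Optional

Sample = tuple[str, int, float]  # (metric name, unix timestamp in seconds, value)


@dataclass(frozen=True)
class WindowSummary:
    metric: str
    window_start: int
    count: int
    minimum: float
    maximum: float
    mean: float


def summarize(samples: list[Sample], window_s: int = 60) -> list[WindowSummary]:
    """Collapse raw samples into one summary per metric per fixed time window."""
    buckets: dict[tuple[str, int], list[float]] = defaultdict(list)
    for metric, ts, value in samples:
        buckets[(metric, ts - ts % window_s)].append(value)
    return [
        WindowSummary(metric, start, len(vals), min(vals), max(vals), fmean(vals))
        for (metric, start), vals in sorted(buckets.items())
    ]


def forward_only_what_matters(samples: list[Sample], window_s: int = 60,
                              alert_above: Optional[float] = None):
    """Return window summaries plus any raw samples that breach the alert threshold."""
    summaries = summarize(samples, window_s)
    alerts = [] if alert_above is None else [s for s in samples if s[2] > alert_above]
    return summaries, alerts


# Ten minutes of one-per-second samples for three metrics, plus one injected spike.
samples = [(m, t, float(t % 10)) for m in ("cpu", "temp", "rpm") for t in range(600)]
samples.append(("temp", 300, 99.0))

summaries, alerts = forward_only_what_matters(samples, window_s=60, alert_above=50.0)
print(len(samples), "raw samples ->", len(summaries) + len(alerts), "records forwarded")
```

The reduction here (1,801 samples in, 31 records out) is a property of this toy input, not a benchmark; real savings depend on window size, metric cardinality, and how often thresholds fire.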

Evolve or Defend

Central data teams won’t disappear. They’ll evolve—just like Ops teams evolved into SRE.

They’ll become:

- Policy setters, not gatekeepers

- Architectural stewards, not pipeline plumbers

- Exception handlers, not executors

Edge teams gain autonomy, responsibility, and the burden of doing it right. Shift-left always distributes accountability.

But pretending the old system will hold? That’s delusion.

Paste-up artists didn’t lose to better tools; they lost to a better model of work. The same is happening to centralized data engineering.

The shift left is here. Your only choice is whether you’ll help build the new system or waste your time defending the old one.

*Want to learn how intelligent data pipelines can reduce your AI costs? Check out Expanso. Or don't. Who am I to tell you what to do?*

NOTE: I'm currently writing a book drawing on what I've seen of the real-world challenges of data preparation for machine learning, focusing on operations, compliance, and cost. I'd love to hear your thoughts!