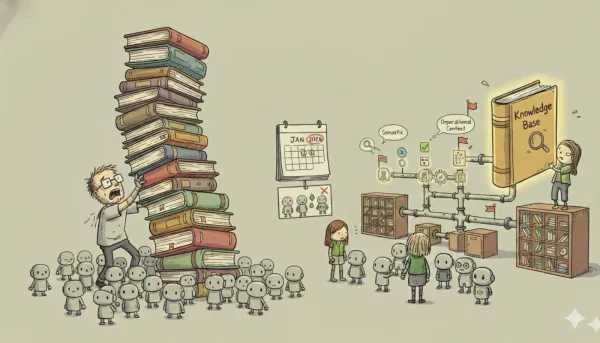

On December 15, 2025, NVIDIA acquired SchedMD, a 40-person company based in Lehi, Utah. The price wasn't disclosed, the press release emphasized a commitment to open source, and most coverage treated the deal as just another addition to NVIDIA’s expanding software portfolio. Most folks missed how huge this