The Real Silicon War Isn't About GPUs: Why Everyone's Fighting Over Boring Chips

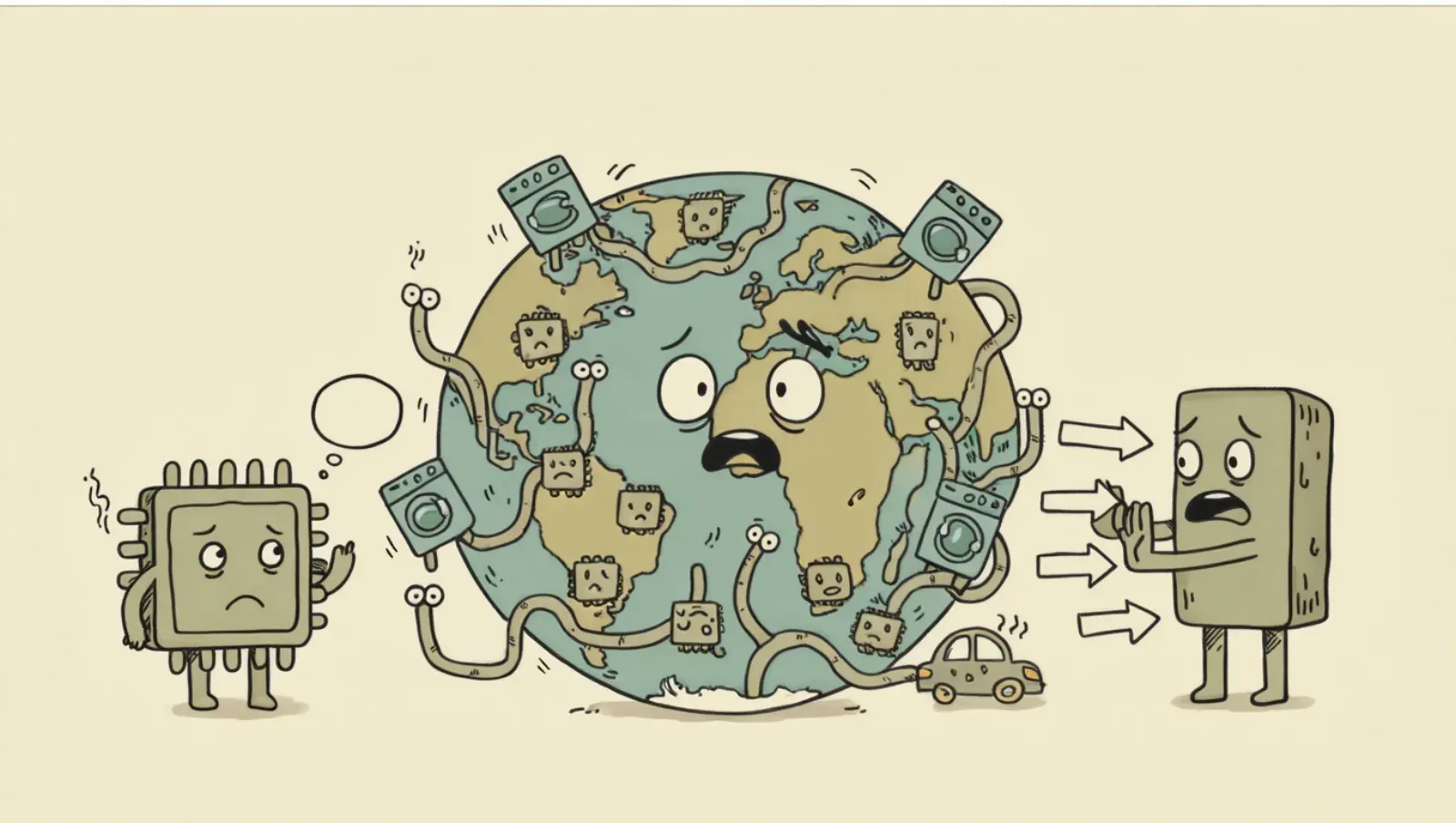

There's a wave swelling up in semiconductors right now. Not the sexy AI chips that suck down kilowatts like a man lost in the desert at an oasis. Not the bleeding-edge 3nm processors that cost more than your house to fab. I'm talking about the chips that nobody writes breathless articles about - the ones that actually make the world work.

This week, the Dutch government seized control of Nexperia, a Netherlands-based semiconductor company owned by China's Wingtech. Not because they make cutting-edge AI accelerators. Not because they're pushing the boundaries of Moore's Law. But because they make the boring-ass chips (discrete transistors, diodes, logic) that go into cars and washing machines.

And you know what? They're absolutely right to freak out about it.

The Numbers. The Very Big Numbers.

While NVIDIA's H100s get all the press, there are billions of edge AI chips shipping right now that you've never heard of. Ambiq alone has shipped 270 million ultra-low-power chips. That's not a typo. 270 million.

The edge AI chip market is projected to grow at a 33.9% CAGR through 2030. We're talking about a market going from $15 billion to over $100 billion. (Quick math: at 33.9% a year, that jump takes about seven years, not five; after five you're at roughly $65 billion. Still enormous.) Why? Because AI isn't just happening in data centers anymore. It's happening everywhere.
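You can sanity-check growth claims like this by compounding them yourself. Below is a minimal sketch that plugs in the $15 billion starting point and 33.9% rate quoted above. The function names are illustrative.

```python
def project(start: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return start * (1 + cagr) ** years


def years_to_reach(start: float, target: float, cagr: float) -> int:
    """Smallest whole number of years for `start` to reach `target`."""
    if cagr <= 0:
        raise ValueError("cagr must be positive")
    years, value = 0, start
    while value < target:
        value *= 1 + cagr
        years += 1
    return years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate needed to go from `start` to `end` in `years`."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    print(f"$15B after 5 years at 33.9%: ${project(15, 0.339, 5):.1f}B")
    print(f"Years to pass $100B at 33.9%: {years_to_reach(15, 100, 0.339)}")
    print(f"CAGR needed for $15B -> $100B in 5 years: {implied_cagr(15, 100, 5):.1%}")
```

At 33.9%, $15 billion compounds to about $65 billion after five years and passes $100 billion in year seven. Getting there in five years would take a CAGR of roughly 46%.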

The per-chip numbers are just as wild:

- 30x energy efficiency gains: Ambiq says the Apollo510 is up to 30x more power-efficient than its previous generation, delivering AI inference at 10-30 milliwatts. Your smartphone's screen alone draws far more than that.

- $2-10 chips running real AI: These aren't toy processors. DeepX's DX-V1 runs YOLOv7 object detection at 30fps on 1-2 watts.

- Distributed by default: Every new car has 50-100 AI-capable chips. Every smart camera. Every industrial sensor.
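Power ratings are easier to compare once you convert them to energy per processed frame. Below is a minimal sketch. It assumes the chip draws its quoted power steadily while holding 30 fps; real power draw varies with the model and the scene.

```python
def energy_per_frame_mj(power_watts: float, fps: float) -> float:
    """Average energy per processed frame, in millijoules.

    Assumes a constant power draw at a steady frame rate, so
    energy per frame = power / frame rate (W / fps = J per frame).
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    return power_watts / fps * 1000  # joules -> millijoules


if __name__ == "__main__":
    # DeepX DX-V1 figures quoted above: YOLOv7 at 30 fps on 1-2 W.
    for watts in (1.0, 2.0):
        print(f"{watts:.0f} W at 30 fps: {energy_per_frame_mj(watts, 30):.1f} mJ per frame")
```

That works out to roughly 33-67 mJ per detection pass, which is a number you can actually budget against a battery.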

Where These Chips Are Actually Going

Forget ChatGPT for a second. Here's where edge AI is actually transforming industries right now:

Automotive: The Rolling Data Center

Modern EVs aren't just electric – they're distributed computing platforms on wheels. A single Tesla Model S has over 60 AI-capable processors handling:

- Battery management (predicting cell degradation before it happens)

- ADAS features (processing 8 camera feeds simultaneously)

- Cabin monitoring (detecting driver drowsiness in real-time)

- Predictive maintenance (analyzing vibration patterns to predict component failure)

The Rockchip RK3588 that nobody talks about? It's running facial recognition on multiple camera feeds WHILE managing vehicle systems. All on 8 watts.

Industrial IoT: The Invisible Revolution

Efficiency is the whole game here. ROHM shrank its AI circuit from 5 million gates to 20,000, just 0.4% of the original size, while keeping full on-device learning capabilities.

What does this mean? Every motor, pump, and valve in a factory can now:

- Predict its own failure weeks in advance

- Optimize its own energy consumption

- Detect anomalies without cloud connectivity

- Learn new patterns WITHOUT a trip back to the cloud for retraining

We're not talking about one AI monitoring the factory. We're talking about thousands of tiny AIs, each specialized for its own task, all running on batteries.
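Detecting anomalies without cloud connectivity can take surprisingly little code. Below is a minimal sketch of one classic approach: a streaming z-score detector built on Welford's running mean/variance algorithm. It illustrates the general technique, not what ROHM or any other vendor ships. The class name, the threshold of 4, and the 30-reading warm-up are arbitrary assumptions.

```python
import math


class VibrationMonitor:
    """Streaming anomaly detector for one sensor channel (hypothetical).

    Tracks a running mean and variance with Welford's algorithm, so it
    needs O(1) memory and no stored history. Readings whose z-score exceeds
    `threshold` are flagged; normal readings are folded into the baseline,
    so the detector adapts to the machine it is attached to.
    """

    def __init__(self, threshold: float = 4.0, warmup: int = 30) -> None:
        self.threshold = threshold      # z-score above which a reading is anomalous
        self.warmup = max(warmup, 2)    # need at least 2 samples for a variance
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0                   # running sum of squared deviations

    def _update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def observe(self, x: float) -> bool:
        """Return True if x looks anomalous; otherwise learn from it."""
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                return True  # keep outliers out of the baseline
        self._update(x)
        return False


if __name__ == "__main__":
    import random

    random.seed(0)
    monitor = VibrationMonitor()
    # Hypothetical RMS vibration readings: steady operation, then a spike.
    readings = [random.gauss(1.0, 0.05) for _ in range(300)] + [1.6]
    flagged = [i for i, r in enumerate(readings) if monitor.observe(r)]
    print("anomalous reading indices:", flagged)
```

Skipping flagged readings keeps one spike from skewing the baseline. The tradeoff is that a genuine, permanent shift in the machine's behavior keeps getting flagged until something resets the detector, so a real system needs a policy for that case.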

Healthcare: AI at the Bedside

Edge AI in healthcare isn't future talk – it's happening NOW:

- Continuous glucose monitors with predictive algorithms on-chip

- Smart stethoscopes diagnosing arrhythmias in real-time

- Wearables detecting seizures before they happen

- Pill cameras processing images as they travel through your GI tract

Syntiant's NDP200 enables always-on health monitoring that runs for months on a coin cell. Not hours. Not days. MONTHS.
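"Months on a coin cell" is really a claim about average power, and the budget is easy to check. Below is a minimal sketch. It assumes a CR2032-class cell at roughly 225 mAh and 3 V, which are typical nominal ratings; the cell and average draw behind Syntiant's figure aren't stated here.

```python
def battery_life_days(capacity_mah: float, voltage: float, avg_power_mw: float) -> float:
    """Idealized runtime: stored energy divided by average power draw.

    Ignores self-discharge, voltage sag under load, and temperature,
    all of which shorten real-world life.
    """
    energy_mwh = capacity_mah * voltage  # mAh * V = mWh
    return energy_mwh / avg_power_mw / 24


def max_avg_power_mw(capacity_mah: float, voltage: float, days: float) -> float:
    """Highest average power draw that still lasts the given number of days."""
    return capacity_mah * voltage / (days * 24)


# Typical nominal CR2032 ratings (assumed, not from a specific datasheet).
CR2032_MAH, CR2032_V = 225.0, 3.0

if __name__ == "__main__":
    budget_uw = max_avg_power_mw(CR2032_MAH, CR2032_V, 90) * 1000
    print(f"Average power budget for 90 days: {budget_uw:.0f} uW")
    print(f"Life at 1 mW average: {battery_life_days(CR2032_MAH, CR2032_V, 1.0):.1f} days")
```

Under these assumptions, lasting three months means averaging around 0.3 mW, and a steady 1 mW drains the cell in about four weeks. That is why always-on designs live in the sub-milliwatt range and wake heavier processing only when needed.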

Retail & Smart Cities: The Ubiquitous Intelligence Layer

Axelera's Metis chip processes 24 camera streams simultaneously at 400fps total throughput. One chip. Ten watts. This means:

- Every retail store can have real-time inventory tracking

- Traffic lights that actually understand traffic patterns

- Security systems that detect anomalies, not just motion

- Smart shelves that know when they need restocking
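An aggregate figure like "400fps across 24 streams" is easier to reason about as a per-camera budget. Below is a minimal sketch. It assumes the load is split evenly across streams, although real deployments often mix high-rate and low-rate cameras.

```python
def per_stream_budget(total_fps: float, streams: int, power_watts: float) -> tuple[float, float]:
    """Split aggregate throughput and power evenly across camera streams.

    Returns (frames per second per stream, watts per stream).
    """
    if streams <= 0:
        raise ValueError("streams must be positive")
    return total_fps / streams, power_watts / streams


if __name__ == "__main__":
    # Axelera Metis figures quoted above: 24 streams, 400 fps total, 10 W.
    fps, watts = per_stream_budget(total_fps=400, streams=24, power_watts=10)
    print(f"{fps:.1f} fps and {watts * 1000:.0f} mW per stream")
```

That works out to roughly 17 fps per camera. If your cameras capture at 30 fps, not every frame gets analyzed.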

The Technical Revolution Nobody's Explaining

Here's what's actually revolutionary about these chips – and it's not what you think:

1. Heterogeneous Computing Is Finally Here

These aren't just scaled-down CPUs. The RK3588 combines:

- 8-core CPU (4× Cortex-A76 + 4× Cortex-A55)

- Arm Mali-G610 GPU

- 6 TOPS Neural Processing Unit

- Dedicated ISPs for camera processing

- Hardware video encoders/decoders

Everything on one chip, each component handling what it's best at. This is the heterogeneous architecture the industry has been promising for decades, finally delivered at $30 per chip.

2. On-Device Learning (Not Just Inference)

Everyone talks about inference at the edge, but the real game-changer could be LEARNING at the edge. ROHM's chip can actually retrain its neural network based on local patterns. No cloud. No updates. It just... learns.

Imagine industrial equipment that gets BETTER at its job over time, without any human intervention. That's not sci-fi. That's shipping in products TODAY.
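The article doesn't describe ROHM's actual learning algorithm, so the sketch below is not ROHM's method. It illustrates the general idea of on-device learning with plain online logistic regression, where each new labeled sample nudges the weights by one stochastic-gradient step. The class name, learning rate, and example features are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class OnlineLogistic:
    """Tiny logistic-regression model updated one sample at a time."""

    def __init__(self, n_features: int, lr: float = 0.1) -> None:
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr  # step size for each gradient update

    def predict_proba(self, x: list[float]) -> float:
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return sigmoid(z)

    def learn(self, x: list[float], y: int) -> None:
        """One SGD step on the log-loss for label y (0 or 1)."""
        err = self.predict_proba(x) - y  # d(log-loss)/dz
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err


if __name__ == "__main__":
    model = OnlineLogistic(n_features=2)
    # Hypothetical labeled stream: (normalized vibration, temperature) -> fault?
    stream = [([0.1, 0.2], 0), ([0.9, 0.8], 1), ([0.2, 0.1], 0), ([0.8, 0.9], 1)] * 200
    for x, y in stream:
        model.learn(x, y)
    print(f"P(fault | calm)  = {model.predict_proba([0.15, 0.15]):.2f}")
    print(f"P(fault | rough) = {model.predict_proba([0.85, 0.85]):.2f}")
```

Each update costs a handful of multiply-adds and needs no stored training set. That constraint is what makes learning at the edge plausible on tiny hardware.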

3. The Death of Latency

SiMa.ai's MLSoC processes video streams with sub-millisecond latency. For context:

- Human reaction time: 250ms

- Cloud round-trip: 50-200ms

- Edge AI processing: <1ms

This isn't just "faster". It enables entirely new categories of applications: surgical robots, autonomous vehicles, real-time language translation. All of them get worse, and some become unsafe, with a cloud round-trip in the loop.
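Latency numbers hit harder when you convert them into distance. Below is a minimal sketch. It assumes a car at 100 km/h and uses the latencies listed above; network jitter and processing at either end would add to the cloud figures.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """How far a vehicle travels while waiting for a decision, in meters."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


if __name__ == "__main__":
    cases = [
        ("edge (<1 ms)", 1),
        ("cloud, fast (50 ms)", 50),
        ("cloud, slow (200 ms)", 200),
        ("human (250 ms)", 250),
    ]
    for label, ms in cases:
        print(f"{label:>22}: {distance_during_latency_m(100, ms):.2f} m at 100 km/h")
```

At highway speed, a 200 ms cloud round-trip means the car covers more than five meters before the answer comes back. At one millisecond, it covers under three centimeters.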

The Market Reality Check

Let's talk scale, because I don't think people understand how BIG this is:

- The number of companies building edge and distributed systems to make your data useful is exploding

- 10 billion edge AI devices expected by 2027

- $2.6 trillion+ in annual value at stake (McKinsey's low-end estimate, for generative AI alone)

We're still in the first inning. Many of these chips cost less than your morning coffee, use less power than an LED bulb, and get roughly 2x better every 18 months.

Kinara's Ara-2 runs Llama2-7B at 6 watts. That's over 100x less power than a single H100, which is rated at up to 700 watts. And it costs 1/1000th as much.
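The "2x better every 18 months" figure is a rule of thumb, not a law. Taken at face value, though, it compounds fast. Here is a tiny sketch:

```python
def improvement_factor(months: float, doubling_months: float = 18) -> float:
    """Cumulative improvement if performance doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


if __name__ == "__main__":
    print(f"After 5 years:  {improvement_factor(60):.1f}x")
    print(f"After 10 years: {improvement_factor(120):.0f}x")
```

That's about 10x in five years and about 100x in ten.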

What This Actually Means for Software

Here's where it gets interesting for us software folks. The entire stack is changing:

Frameworks Are Fragmenting (In a Good Way)

- TensorFlow Lite for mobile/embedded

- Apache TVM for hardware optimization

- ONNX Runtime for cross-platform deployment

- Custom frameworks for specific chips (Hailo's Dataflow Compiler, etc.)

The days of "just use PyTorch" are OVER. Every chip has its own optimization path, and the differences in performance can be 10-100x.
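Almost all of these toolchains share one step: quantizing float32 weights down to int8, since many edge NPUs are optimized for integer math. Below is a minimal sketch of per-tensor affine quantization in plain Python. It illustrates the general technique, not any specific framework's implementation. Real toolchains add calibration data, per-channel scales, and operator fusion.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float, int]:
    """Per-tensor affine quantization of floats to int8.

    Maps [lo, hi] onto [-128, 127] so that real ~= scale * (q - zero_point).
    Returns (quantized values, scale, zero_point).
    """
    lo = min(min(values), 0.0)  # range must include 0 so zero is exact
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q: list[int], scale: float, zero_point: int) -> list[float]:
    """Map int8 values back to approximate floats."""
    return [scale * (qi - zero_point) for qi in q]


if __name__ == "__main__":
    weights = [-1.0, -0.5, 0.0, 0.25, 0.9]  # hypothetical layer weights
    q, scale, zp = quantize_int8(weights)
    restored = dequantize(q, scale, zp)
    print("int8:", q)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

The payoff is 4x smaller weights and integer arithmetic. Each target's compiler then makes its own choices about scales, layouts, and fused kernels, which is where those 10-100x differences can creep in.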

New Programming Models

We're moving from "train once, deploy everywhere" to:

- Federated learning across edge devices

- Continuous on-device adaptation

- Hierarchical intelligence (edge → fog → cloud)

- Swarm intelligence patterns

This isn't your grandfather's client-server architecture. Hell, it's not even your older brother's microservices.
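Federated learning is the least intuitive item on that list. Below is a minimal sketch of the core aggregation step of FedAvg (federated averaging, the standard baseline algorithm). It assumes every device trains the same model architecture and reports a flat parameter vector plus its local sample count. Transport, security, and the local training loop are left out.

```python
def fed_avg(client_weights: list[list[float]], client_sizes: list[int]) -> list[float]:
    """Federated averaging: combine locally trained models into a global one.

    Each client's parameter vector is weighted by how many local samples it
    trained on. Raw data never leaves the device; only the weights do.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    global_w = [0.0] * n_params
    for w, n in zip(client_weights, client_sizes):
        for i in range(n_params):
            global_w[i] += w[i] * n / total
    return global_w


if __name__ == "__main__":
    # Three hypothetical devices that trained the same 2-parameter model.
    print(fed_avg([[1.0, 0.0], [3.0, 2.0], [2.0, 1.0]], [100, 100, 200]))  # -> [2.0, 1.0]
```

Weighting by sample count keeps a device with a handful of readings from pulling the global model as hard as one with thousands.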

The Return of Hardware Knowledge

Remember when software engineers could ignore hardware? Those days are DONE. Understanding memory hierarchies, SIMD instructions, and hardware accelerators is now TABLE STAKES for AI deployment.

The good news? The tools are getting better. The EU's Horizon Europe research funding is putting €100M+ just into making these chips easier to program.
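The memory-hierarchy point is easiest to see with back-of-the-envelope math. Below is a minimal sketch using a 7B-parameter model like the Llama2-7B mentioned earlier. It counts weights only, so real deployments need extra room for activations and the KV cache.

```python
def model_footprint_gb(n_params: float, bits_per_param: int) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return n_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for bits in (32, 16, 8, 4):
        print(f"7B params at {bits:>2}-bit: {model_footprint_gb(7e9, bits):5.1f} GB")
```

LLM-style workloads read through the full set of weights for every generated token. For them, whether those bytes sit in on-chip SRAM, external DRAM, or flash often matters more than the TOPS rating.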

But I'm All In On Cloud, What About Me?

The cloud-first AI approach is already obsolete for many use cases.

Why would you:

- Pay for bandwidth to upload video streams?

- Accept 100ms+ latency for real-time decisions?

- Risk privacy breaches from data transmission?

- Burn kilowatts when milliwatts will do?

The answer is: you wouldn't. And increasingly, you won't have to.
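The bandwidth question alone is easy to quantify. Below is a minimal sketch. It assumes a 1080p camera streaming continuously at around 4 Mbps, which is a plausible H.264 bitrate; yours will vary with codec, resolution, and scene.

```python
def upload_gb_per_day(bitrate_mbps: float, cameras: int = 1) -> float:
    """Data uploaded per day by continuously streaming cameras, in GB."""
    seconds_per_day = 24 * 60 * 60
    megabits = bitrate_mbps * seconds_per_day * cameras
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes (decimal)


if __name__ == "__main__":
    print(f"1 camera at 4 Mbps:   {upload_gb_per_day(4):.1f} GB/day")
    print(f"24 cameras at 4 Mbps: {upload_gb_per_day(4, 24) / 1000:.2f} TB/day")
```

Multiply by 24 cameras and you're past a terabyte a day. If the analysis happens on-site, you send only a trickle of metadata instead.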

TIME naming Ambiq's platform one of 2025's best inventions isn't about the technology being flashy. It's about it being practical. These chips enable AI applications that simply weren't possible before – not because we couldn't build the models, but because we couldn't run them where they needed to run.

What Happens Next

We're about to see an explosion of AI applications that seemed impossible just two years ago:

- Truly smart homes that learn your patterns without sending data to Google

- Medical devices that provide specialist-level diagnosis at the point of care

- Industrial systems that optimize themselves continuously

- Autonomous systems that work reliably without connectivity

The bottleneck isn't compute anymore. It's not even power. It's imagination.

The companies that win the next decade won't be the ones with the biggest models or the most GPUs. They'll be the ones who figure out how to deploy intelligence EVERYWHERE, on chips that cost nothing and run forever.

So yeah, pay attention to the "boring" chips. Because boring is eating the world, one milliwatt at a time.

Want to learn how intelligent data pipelines can reduce your AI costs? Check out Expanso. Or don't. Who am I to tell you what to do?

NOTE: I'm currently writing a book based on what I've seen of the real-world challenges of data preparation for machine learning, focusing on the operational, compliance, and cost sides. I'd love to hear your thoughts!