Two KubeCons, One Conference: While Everyone Demos AI Agents, Engineers Are Fighting With Syslogs

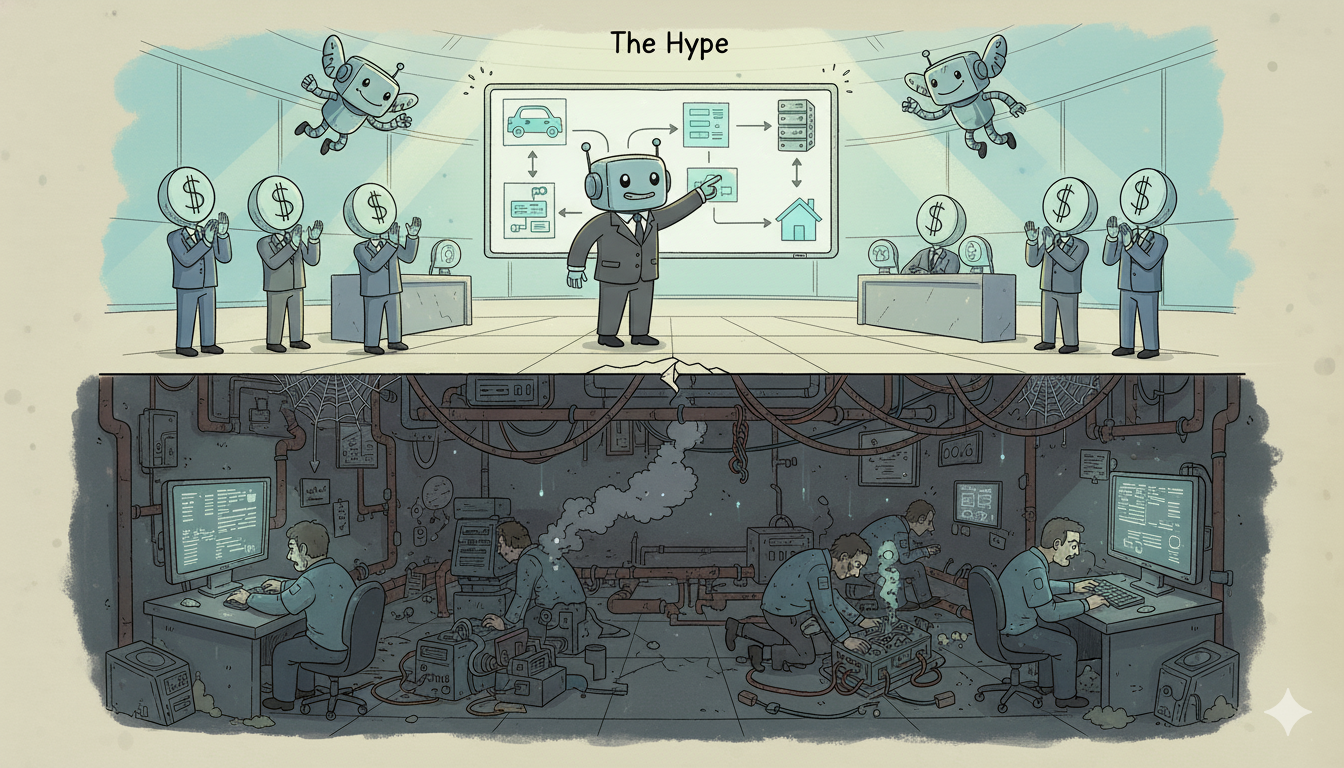

KubeCon North America 2025 was actually two different events happening simultaneously in the same building.

The first KubeCon lived on the exhibit floor. Every third booth featured some variation of "AI Agent" in the marketing. Autonomous operations. Self-healing infrastructure. Intelligent orchestration. The demos were slick. The pitch decks promised transformation. NVIDIA showcased Agent Blueprints. Google announced Agent-to-Agent protocols on GKE. Vendors competed to out-automate each other.

The second KubeCon happened in hallways, coffee lines, and after-hours conversations. This is where I spent most of my time. And not a single conversation was about AI agents.

Instead, many of the folks I talked to asked questions like:

- "How do you deserialize XML from legacy systems without choking your pipeline?"

- "We're collecting syslogs from 1,000 edge machines - what's your secret for not dropping lines?"

- "At 100 microservices running 100 metrics per second, how do you guarantee delivery?"

The gap between these two KubeCons reveals something important: the infrastructure we're selling isn't the infrastructure we need.

The Numbers That Don't Make Headlines

The AI agent hype is real and measurable. The global AI agent market is projected to grow from $5.1 billion in 2024 to $47.1 billion by 2030. Last year, 85% of enterprises claimed they'd deploy AI agents by 2025. Every major cloud provider now has an agent strategy.

The production reality is equally measurable, just less flattering. According to recent IBM research, "most organizations aren't agent-ready." SiliconANGLE reports that 2025 won't be "The Year of the Agent" because "fundamental groundwork is still missing." MIT studies suggest 95% of enterprise AI pilots fail to deliver expected returns.

What's the missing groundwork? Data quality and lineage. Integration and orchestration. The unglamorous stuff. The boring stuff.

The syslogs stuff.

The Math Nobody Wants to Do

Let me walk through the arithmetic that dominated my KubeCon conversations.

Consider a modest production environment: 100 microservices, each emitting 100 metrics per second. Standard observability setup.

That's 10,000 data points per second. 864 million per day. 315 billion per year.

And we're just getting started. Telemetry volume is climbing 35% annually. Average enterprises lost $12.9 million in 2024 due to undetected data errors. These aren't edge cases. They're the baseline.

Meanwhile, only 57% of companies even use distributed traces - a technology that's been "mature" for years. Only 7% have LLM observability in production.

The foundation isn't ready for AI agents because the foundation isn't ready for consistent data collection. We're discussing autonomous operations while struggling with reliable telemetry.

Why the Gap Exists

This disconnect is structural.

AI agents compete for labor budgets, which represent 60-70% of enterprise spending. Traditional software competes for IT budgets - maybe 2% of spending. That's a 30x difference in addressable market. Vendors aren't stupid. They're following the money.

The pitch they give is solid! "Replace expensive human labor with autonomous agents." The pitch for better syslog aggregation is... less compelling: "Stop losing data you didn't know you were losing." One gets funded. The other gets deferred.

Additionally, the problems themselves have different visibility profiles. When an AI agent completes a task, everyone notices. When a log line drops at 3 AM, nobody notices until the incident that missing line would have explained.

Conference booths optimize for what sells. Hallway conversations reveal what breaks.

The Iceberg Under Every Agent Demo

Every polished AI agent demo conceals an iceberg of prerequisites. Research suggests hidden costs exceed visible costs by 3-5x when you include:

- Data preparation. Agents need clean, consistent, well-structured inputs. Most enterprise data is "scattered, siloed, unclean and lacking consistent lineage," according to SiliconANGLE. Fixing that isn't a weekend project.

- Integration. For an agent to be useful, it must connect to multiple systems often the ones with XML interfaces, SOAP endpoints, and legacy protocols. That XML deserialization problem isn't theoretical. It's blocking your agent deployment.

- Reliability. Agents that occasionally hallucinate are annoying. Agents that occasionally hallucinate while missing critical log data are dangerous. You can't build autonomous operations on infrastructure that drops information.

- Governance. "Who's responsible if the agent gets it wrong?" is a question I heard repeatedly. Without clean audit trails and data lineage, the answer is legally complicated.

The companies I talked to that were succeeding with AI weren't the ones with the fanciest agents. They were the ones who'd invested years in boring infrastructure. McDonald's reportedly spent $60,000 PER LOCATION on data infrastructure - not on agents, but on the plumbing that would eventually make agents viable. (Just so you don't have to do the math - that's 44,000 locations = $2.64 BILLION (with a B)).

What Practitioners Actually Need

Based on dozens of conversations, here's the priority stack for working engineers:

1. Reliable ingestion at scale

Not "mostly reliable." Not "99.9% reliable." The kind of reliable where you can mathematically prove your loss rate. This means handling back-pressure correctly, managing network partitions gracefully, and failing loudly when something goes wrong.

2. Schema management across heterogeneous sources

The world isn't JSON-only. It's XML from 2008. It's binary protocols from industrial equipment. It's CSV exports from systems that predate REST. Every connector is a potential failure point.

3. Edge processing that doesn't require a PhD

Over 12,400 attendees at KubeCon Europe dealt with distributed systems daily. But processing data at the edge - before it hits the network - remains surprisingly hard. The tooling assumes you'll centralize first and process later.

4. Cost visibility before cost disaster

Cloud providers charge by the gigabyte. Log storage compounds exponentially. One team told me their observability costs exceeded their compute costs. They couldn't afford to monitor their systems.

None of these make good conference demos. All of them determine whether your production systems actually work.

The Real Opportunity

This isn't a doom-and-gloom story. The gap between hype and practice represents genuine opportunity.

Organizations that focus on data infrastructure now will be the ones whose AI agents actually function in 2027. While competitors chase demos, they'll build foundations. The 10% of AI agent projects that succeed aren't succeeding because of better models. They're succeeding because of better data pipelines.

The pattern is recognizable from Kubernetes itself. Back in 2015, everyone wanted to demo container orchestration. The teams that succeeded were the ones who first solved networking, storage, and security. The boring stuff. The prerequisite stuff.

We're watching the same movie with different actors. AI agents are the new container orchestration. Data infrastructure is the new networking-storage-security stack. You can't skip the prerequisites just because they're unglamorous.

Processing Data Where It Lives

The architecture that emerged from my KubeCon conversations wasn't revolutionary. It was practical.

Stop trying to centralize everything. Start processing data where it's generated. Filter at the edge. Enrich locally. Send only what matters.

Those 100 microservices at 100 metrics per second? Maybe 80% of those metrics are routine. Process them locally. Aggregate. Compress. Send summaries. Reserve bandwidth for the 20% that indicates actual problems.

That syslog collection from 1,000 machines? Don't ship raw logs to a central aggregator. Parse locally. Extract patterns. Forward anomalies. Keep detailed logs available for on-demand retrieval.

The XML deserialization problem? Handle it at the source. Transform to a common format before transmission. Pay the CPU cost at the edge where compute is cheap, not the bandwidth cost in transit where it's expensive.

This isn't exotic technology. It's distributed computing patterns applied to data infrastructure. The same patterns that made Kubernetes work are the patterns that make data pipelines work. Move computation to data, not data to computation.

Teams implementing this approach report dramatic improvements. Bandwidth costs drop 60-80%. Latency disappears because processing happens locally. Data quality improves because context never leaves the source.

A Conference I'd Actually Attend

Maybe next KubeCon needs a "Boring But Critical Infrastructure" track. I'd fill my schedule with sessions like:

- "XML Deserialization at 99.9999% Reliability: A Case Study"

- "We Stopped Dropping Log Lines: Our Two-Year Journey"

- "Syslog Collection at Scale: What Nobody Tells You"

- "The Month We Spent Debugging Our Metrics Pipeline"

No AI agents. No autonomous operations. No self-healing anything. Just engineers solving real problems with proven techniques.

The booths could showcase schema management tools. Log aggregation frameworks. Edge processing libraries. Connector ecosystems. The work that makes everything else possible.

Attendance might be lower. Marketing impact would definitely be smaller. But practitioners would leave with solutions to problems they actually have, rather than inspiration about problems they might someday encounter.

The Work That Matters

AI agents will eventually deliver on their promise. The models will improve. The orchestration will mature. The tooling will solidify. I'm genuinely optimistic about where this technology is heading.

But "eventually" requires groundwork. And that groundwork is decidedly unsexy. It's reliable data collection. It's consistent schema management. It's distributed processing. It's the infrastructure that doesn't fail at 3 AM.

The engineers fighting with syslogs aren't behind the curve. They're doing the prerequisite work. They're building the foundation that autonomous systems will require. They're solving the problems that booth demos skip over.

Next time you see an AI agent demo, ask a simple question: "What's your data loss rate?" The answer (or more likely, the blank stare) will tell you everything about whether that agent is ready for production.

The future belongs to AI agents. The present belongs to reliable data infrastructure. And you can't get to the future without doing the present's work first.

Those syslog problems aren't distracting you from the real work. They are the real work.

Want to learn how intelligent data pipelines can reduce your AI costs? Check out Expanso. Or don't. Who am I to tell you what to do.*

NOTE: I'm currently writing a book based on what I have seen about the real-world challenges of data preparation for machine learning, focusing on operational, compliance, and cost. I'd love to hear your thoughts!