You Can't Unbake a Cake (or Why Data Isolation in the Age of AI is BASICALLY Impossible)

Legislators across the globe want your data removed from AI models. The EU's "right to be forgotten" under GDPR Article 17, California's CCPA, a dozen other privacy frameworks—all built on the assumption that stored data works like filing cabinets. Find your folder, pull it out, done.

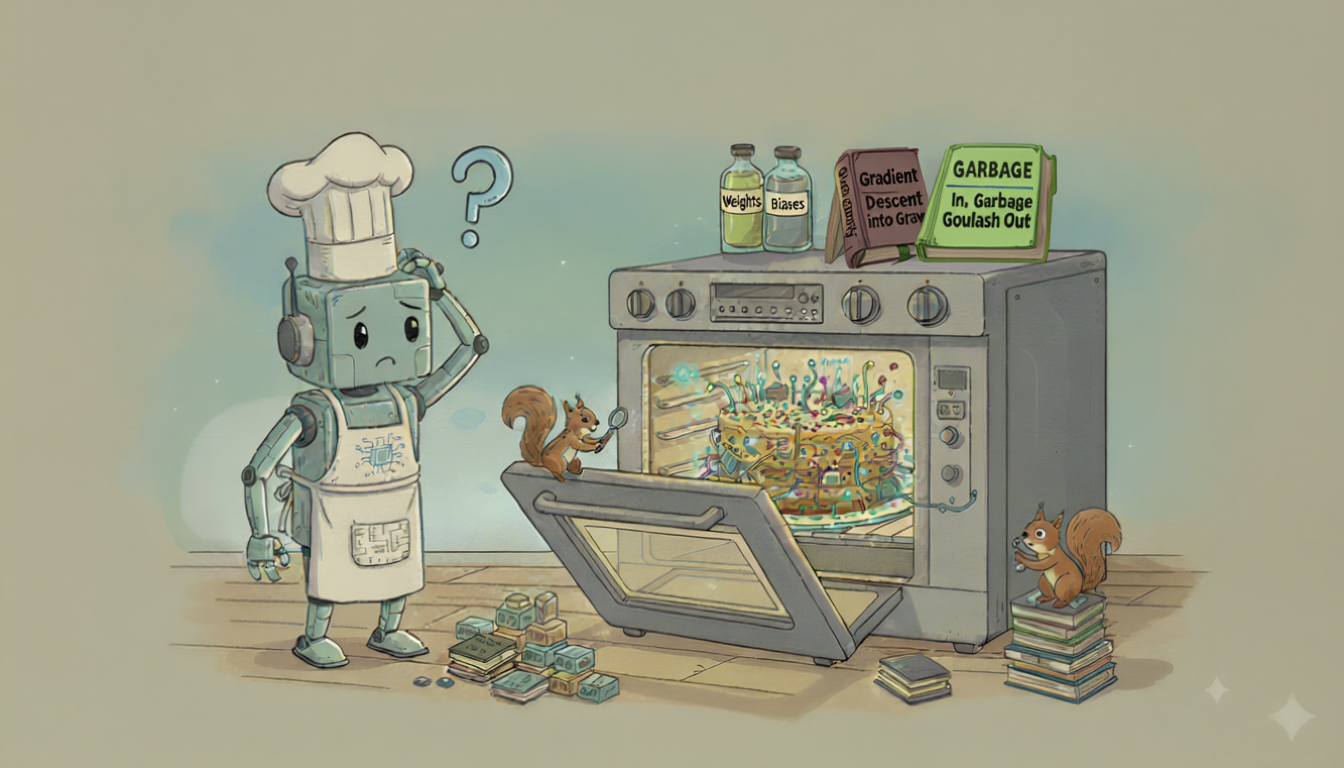

Except neural networks don't store data. They bake it into something fundamentally different.

Try extracting one cup of flour from a finished cake. That's what we're asking AI companies to do. And when you peel back the surface, that's effectively impossible.

Let's Start with the Recipe

A neural network is surprisingly simple at its core. Think of it as a recipe that teaches itself to cook.

You start with ingredients (input data), run them through a series of cooking steps (hidden layers), and get a finished dish (output). A network that recognizes handwritten digits takes pixel values as ingredients and produces "this is a 3" as the output.

The magic happens in the cooking steps. Each step transforms the data using two things: weights and biases. The math is pretty simple:

(input × weight) + bias = output

That's it. That's the core operation happening at every neuron in the network. Take each input, multiply it by its weight, add the results together, add a bias, pass it forward. Do this millions or billions of times across multiple layers, and you get something that can recognize faces, translate languages, or write code.
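Here is that operation as a minimal Python sketch. The `relu` activation, the function names, and every number are illustrative assumptions, not values from any real model.

```python
def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias: sum(input * weight) + bias."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def relu(z):
    """A common activation (assumed here): keep positives, clamp negatives to zero."""
    return max(0.0, z)


def layer(inputs, weight_rows, biases):
    """One 'cooking step': every neuron in the layer sees the same inputs."""
    return [relu(neuron(inputs, w, b)) for w, b in zip(weight_rows, biases)]


# Three made-up pixel intensities feeding a layer of two neurons.
pixels = [0.0, 0.5, 1.0]
hidden = layer(
    pixels,
    weight_rows=[[0.47, 0.83, -0.2], [-0.6, 0.1, 0.3]],
    biases=[0.1, -0.5],
)
print(hidden)
```

Stack a few of these layers, feed each one's output into the next, and you have the whole forward pass.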

Weights: The Recipe Ratios That Learn

Weights are like the ratios in a recipe—two cups flour to one cup sugar. But imagine a recipe that adjusts these ratios based on how the dish tastes.

When a network learns to recognize a handwritten "3":

Initial State: The network starts with random weights. Pure guesswork - like a chef randomly combining ingredients without a recipe. Maybe it uses a weight of 0.47 for detecting curves and 0.83 for detecting vertical lines. (Interestingly, the WAY you choose the randomness can affect your output too - crazy but true!)

Forward Pass: Your handwritten "3" flows through the network. Each pixel value gets multiplied by weights, biases get added, and the network spits out... "7". Wrong.

The Learning: The network measures how wrong it was (this is called the loss function - how far off was the cake from what you wanted?). Then it works backward through every single cooking step, asking: "Which ingredient ratio made this too salty?"

Backward Propagation: Every weight in the entire network gets adjusted. The curve detector might change from 0.47 to 0.4702. The vertical line detector goes from 0.83 to 0.8297. Thousands or millions of tiny adjustments across the whole system.

Do this 10,000 times with different handwritten digits, and eventually the ratios stabilize into something that works. The network has learned the recipe.
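The loop above can be sketched in a few lines of Python. This is a toy under heavy assumptions: a single neuron instead of a deep network, two invented features ("curviness" and "straightness") instead of pixels, and a sigmoid output with a hand-derived gradient. The shape of the loop is the point: guess, measure the error, nudge every weight, repeat.

```python
import math
import random


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Forward pass: weighted sum plus bias, then sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train(examples, steps=10_000, lr=0.1, seed=0):
    rng = random.Random(seed)
    # Initial state: random weights, pure guesswork.
    weights = [rng.uniform(-1.0, 1.0) for _ in range(2)]
    bias = 0.0
    for _ in range(steps):
        features, label = rng.choice(examples)
        # How wrong were we? For sigmoid + cross-entropy loss, the gradient
        # at the neuron's input is simply (prediction - label).
        error = predict(weights, bias, features) - label
        # Backward step: nudge every weight (and the bias) a tiny amount.
        weights = [w - lr * error * x for w, x in zip(weights, features)]
        bias -= lr * error
    return weights, bias


# Hypothetical features: (curviness, straightness). Label 1 means "3", 0 means "7".
examples = [((0.9, 0.1), 1), ((0.8, 0.3), 1), ((0.2, 0.9), 0), ((0.1, 0.7), 0)]
weights, bias = train(examples)
print("learned weights:", weights, "bias:", bias)
print("P(curvy digit is a 3):", predict(weights, bias, (0.9, 0.1)))
```

No single step teaches the model anything you could point to. The "recipe" is whatever the weights settle into after thousands of small nudges.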

Biases: The Baseline Adjustments

Biases are simple - they're the "pinch of salt" that shifts the baseline. Without biases, if all your inputs are zero, you get zero output. That's useless for making decisions.

A bias is just a number added to each neuron's weighted sum. It shifts the activation threshold - determining when a neuron decides "yes, I see a curve" versus "nope, that's a straight line." Every neuron gets its own bias, so each layer carries a whole set of them, adjusting the baseline for that cooking stage.

The math stays simple: (input × weight) + bias. But the bias makes sure you're not starting from absolute zero every time.
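A quick way to see the threshold shift, as a sketch with made-up weights and a hypothetical `fires` helper that treats "weighted sum plus bias is positive" as the neuron saying yes:

```python
def fires(inputs, weights, bias):
    """A neuron 'fires' when its weighted sum plus bias is positive."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias > 0


weights = [0.47, 0.83]
blank_image = [0.0, 0.0]
faint_curve = [0.3, 0.0]

# With all-zero inputs the weights are irrelevant; only the bias can move the result.
print(fires(blank_image, weights, bias=0.0))   # stuck at the zero baseline
print(fires(blank_image, weights, bias=0.2))   # a positive bias lifts the baseline

# A negative bias raises the bar: a faint curve no longer counts as a curve.
print(fires(faint_curve, weights, bias=0.0))
print(fires(faint_curve, weights, bias=-0.2))
```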

Your Data Goes In... And Disappears

Let's say you're training data. Maybe you're a 5'10" white man who writes lots of emails about distributed systems. This information goes into a model as part of the training set.

A modern frontier model like GPT-4 is rumored to have roughly 1.76 trillion parameters (OpenAI has never confirmed the number). That's 1,760,000,000,000 individual numbers, each one a decimal that looks like 0.4829374628.

When your data gets processed, every single one of those weights adjusts. Microscopically. Maybe one weight goes from 0.4829374628 to 0.4829374631. Another shifts from -0.2847362847 to -0.2847362843.

Your information - your height, your demographics, your writing patterns - is now distributed across 1.76 trillion decimal places. Each one nudged by something like 0.0000000003 because of you. And because of the 12 million other people in the training set. And because of the specific order the data was processed. And because of random initialization values. And because of thousands of other factors that all mixed together.

Which decimal place is specifically "you"?
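To make that concrete, here is a small sketch using a four-weight linear model and invented feature vectors (all of it assumption, none of it from a real system). It shows two things: one example nudges every weight, and the size of the nudge depends on what the model had already seen.

```python
def sgd_step(weights, features, target, lr=0.01):
    """One squared-error gradient step for a linear model (no bias, for brevity)."""
    prediction = sum(w * x for w, x in zip(weights, features))
    error = prediction - target
    return [w - lr * error * x for w, x in zip(weights, features)]


# Made-up numeric features standing in for "you" and for one other person.
you = ([1.78, 0.6, 0.9, 0.3], 1.0)
someone_else = ([1.60, 0.2, 0.4, 0.8], 0.0)

start = [0.4829, -0.2847, 0.1200, 0.7731]

# Case A: your example is processed first.
after_you = sgd_step(start, *you)
delta_if_first = [a - s for a, s in zip(after_you, start)]

# Case B: someone else is processed first, then you.
midpoint = sgd_step(start, *someone_else)
after_both = sgd_step(midpoint, *you)
delta_if_second = [a - m for a, m in zip(after_both, midpoint)]

print("your nudge if processed first: ", delta_if_first)
print("your nudge if processed second:", delta_if_second)
```

Same person, same data, two different sets of nudges. In this toy they even point in opposite directions. "Your" contribution is not a fixed quantity that sits in the weights waiting to be subtracted.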

The Sorites Paradox of AI Privacy

The ancient Greeks had a thought experiment: If you have a pile of sand and remove one grain, it's still a pile. Remove another grain, still a pile. But at some point, you don't have a pile anymore—you have scattered grains.

So when exactly does a pile stop being a pile?

This is the Sorites paradox, and it perfectly describes the data problem in neural networks. Your contribution is everywhere and nowhere. It's not stored as "David Aronchick's data in slot 47293." It's blended into the mathematical structure of 1.76 trillion weights, each one influenced by millions of training examples.

You can't point to any single weight and say "this one is mine." Because it's also everyone else's. And removing "your" contribution would require answering an unanswerable question: Which 0.00000000000003 adjustment came from you versus anyone else?

The Regulatory Impossibility

Now imagine a regulator showing up and saying: "Remove David's data from your model. He invoked his right to be forgotten."

The AI company has three options:

Option 1: Re-train from scratch without David's data.

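Cost for a GPT-4 scale model: reportedly upwards of $100 million in compute. Timeline: several months. Outcome: You get a different model, not the old one with David neatly subtracted. Change the training set and every weight lands somewhere slightly different, so behavior shifts in ways you can't predict. Integrations and prompts tuned against the old model may break. And every deletion request leaves you training on a little less data.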

Option 2: Surgically remove David's contribution from each weight.

This requires:

- Tracking exactly when David's data influenced each of 1.76 trillion weights

- Calculating the precise mathematical contribution of his data versus everyone else's

- Reversing those specific adjustments without disrupting anything else

- Oh, and you needed to have recorded all of this during training (almost no one does)

Even if you had perfect records, the calculation is intractable. The interactions between weights aren't linear. Changing one creates cascading effects through the entire network. You'd need to simulate billions of counterfactual training scenarios to isolate David's contribution.
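Here is a sketch of why even perfect records don't save you. It uses a single tanh neuron and three invented training records (all hypothetical). We log exactly what David's example did to the weights, subtract it from the finished model, and compare the result with a model retrained without him.

```python
import math


def step(weights, features, target, lr=0.1):
    """One gradient step on squared error for a single tanh neuron."""
    prediction = math.tanh(sum(w * x for w, x in zip(weights, features)))
    grad_scale = (prediction - target) * (1.0 - prediction * prediction)
    return [w - lr * grad_scale * x for w, x in zip(weights, features)]


def train(weights, records):
    for features, target in records:
        weights = step(weights, features, target)
    return weights


start = [0.47, 0.83, -0.25]
alice = ([1.0, 0.2, 0.5], 1.0)
david = ([0.3, 0.9, 0.1], -1.0)   # the record we are asked to forget
bob = ([0.6, 0.4, 0.8], 1.0)

# What actually happened: alice, then david, then bob. We log david's exact update.
before_david = train(start, [alice])
after_david = step(before_david, *david)
davids_logged_delta = [a - b for a, b in zip(after_david, before_david)]
deployed = train(after_david, [bob])

# "Surgical" removal: subtract the logged delta from the deployed weights.
naive_unlearned = [w - d for w, d in zip(deployed, davids_logged_delta)]

# Ground truth: retrain from scratch without david.
retrained = train(start, [alice, bob])

gap = max(abs(n - r) for n, r in zip(naive_unlearned, retrained))
print("largest mismatch between 'surgical' and retrained weights:", gap)
```

The mismatch comes from Bob. His update was computed on weights David had already moved, so David's influence is baked into Bob's update too. With three records you can untangle that by hand. With billions of updates, each one conditioned on all the ones before it, you can't.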

Option 3: Just... lie and say you did it.

Yeah, this is probably what happens. And since proving that my data IS in there is just as hard as proving that it's NOT, the claim is basically unverifiable. Winner?

Why This Matters Beyond Privacy Theater

The fundamental mismatch is between how we think about data storage and how neural networks actually work.

Privacy frameworks assume data works like a database: discrete records that can be queried, identified, and deleted. This made perfect sense in 2010. Databases are essentially fancy filing cabinets. Find the folder marked "David Aronchick," pull it out, shred it.

Neural networks are baking. The data is the flour, eggs, and sugar. The training process mixes everything together, applies heat (compute), and produces something new. The original ingredients aren't stored anywhere—they're transformed into structure. Into weights and biases that encode patterns across the entire training set.

Once you've baked the cake, the eggs don't exist anymore. They're part of the cake's structure now. You can't un-bake it.

The One-Way Door

This isn't a temporary technical limitation that better engineering will solve. It's architectural. The entire value proposition of neural networks is that they compress massive datasets into efficient mathematical structures. They're designed to be irreversible.

You couldn't make them reversible without fundamentally breaking what makes them useful. It would be like asking for a cake that tastes like cake but still contains intact eggs, unmixed flour, and separate sugar crystals. That's not a cake anymore—that's a pile of ingredients.

The efficiency comes from the irreversibility. The generalization comes from mixing patterns together. The entire point is to find commonalities across millions of examples and encode them into weights. Making this process reversible would destroy the learning itself.

But What About Differential Privacy?

Someone always brings up differential privacy as the solution. It sounds elegant: inject statistical noise into the training process so no individual's data can be extracted from the model.

The math is sophisticated. During training, you cap how much any single example can move the weights, then add carefully calibrated random noise to each gradient update. The model learns from patterns across thousands of examples while any single example gets obscured by the noise. In theory, even if someone could examine all 1.76 trillion weights, they couldn't confidently tell whether your data was in the training set at all, let alone reconstruct it, because the model would look statistically almost the same with or without you.
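For the curious, here is a rough sketch of one such noisy update in the style of DP-SGD: clip each example's gradient, sum, add Gaussian noise, average. The function names, parameters, and gradients are assumptions for illustration. A real system would use a vetted library and track the privacy budget, which this sketch does not do.

```python
import math
import random


def clip(gradient, max_norm):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in gradient))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in gradient]


def dp_sgd_step(weights, per_example_grads, lr, max_norm, noise_multiplier, rng):
    # 1. Cap the influence any single example can have on this update.
    clipped = [clip(g, max_norm) for g in per_example_grads]
    # 2. Sum the clipped gradients across the batch.
    summed = [sum(column) for column in zip(*clipped)]
    # 3. Add Gaussian noise scaled to the clipping bound.
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    # 4. Average and take an ordinary gradient step.
    batch_size = len(per_example_grads)
    return [w - lr * n / batch_size for w, n in zip(weights, noisy)]


rng = random.Random(42)
weights = [0.4829, -0.2847]
grads = [[3.0, 4.0], [0.3, 0.4], [-0.1, 0.2]]   # made-up per-example gradients
print(dp_sgd_step(weights, grads, lr=0.1, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Notice that the first example's gradient is ten times larger than the second's before clipping and only twice as large after. That cap, plus the noise, is what bounds any one person's influence on the final weights.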

HOWEVER (and we're getting into the realm of philosophy here), without YOU that SPECIFIC final value wouldn't exist. The noise itself is drawn at random, independent of anyone's data. But it gets added to a gradient that your data helped compute. So when weight #847,293,847 moves by 0.00047382, it did not do so by cosmic accident. It moved by that exact amount because your (clipped) contribution plus that random draw produced that specific number. Take your example out, keep the same random draw, and the weight lands somewhere else.

So is that noisy update "yours"? The model couldn't have arrived at that precise configuration without processing your data. Sure, the noise obscures what your original data was: you can't reverse-engineer "5'10" white man" from the weights. But that particular mathematical fingerprint exists only because you were in the training set.

It's Sorites all over again. We've added a layer of statistical obfuscation, but the fundamental question remains: at what point does transformed data stop being "your" data? When it's encrypted? When it's hashed? When it's mixed with noise? When it's distributed across a trillion weights?

Differential privacy is genuinely useful for preventing the most egregious forms of data extraction. But it doesn't solve the philosophical problem of data ownership in transformed mathematical structures. It just makes the transformation more complex.

What About Federated Learning?

The other popular answer is federated learning: don't build one giant model with everyone's data. Instead, train many small models locally on individual devices or institutions, then aggregate only the weight updates.

Your phone trains a local model on your typing patterns, sends only the weight adjustments (not your actual messages) to a central server, and those adjustments get merged with millions of others. Your raw data never leaves your device. Problem solved, right?
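In code, one round of that process looks roughly like the sketch below, a bare-bones take on federated averaging. The linear model, the three "phones", and their data are all invented for illustration. Production systems add secure aggregation, client sampling, and much more.

```python
def local_update(global_weights, local_data, lr=0.1):
    """Train on-device with a few gradient steps; return only the weight delta."""
    weights = list(global_weights)
    for features, target in local_data:
        error = sum(w * x for w, x in zip(weights, features)) - target
        weights = [w - lr * error * x for w, x in zip(weights, features)]
    return [w - g for w, g in zip(weights, global_weights)]


def federated_round(global_weights, clients):
    """Server side: merge client deltas, weighted by how much data each client has."""
    total_examples = sum(len(data) for data in clients)
    merged = [0.0] * len(global_weights)
    for data in clients:
        delta = local_update(global_weights, data)   # raw data never leaves `data`
        share = len(data) / total_examples
        merged = [m + share * d for m, d in zip(merged, delta)]
    return [g + m for g, m in zip(global_weights, merged)]


# Three hypothetical phones, each holding private samples of y = 2*x0 - x1.
phone_a = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0)]
phone_b = [([1.0, 1.0], 1.0)]
phone_c = [([2.0, 1.0], 3.0), ([1.0, 2.0], 0.0), ([0.5, 0.5], 0.5)]

global_weights = [0.0, 0.0]
for _ in range(200):
    global_weights = federated_round(global_weights, [phone_a, phone_b, phone_c])
print(global_weights)
```

The server only ever sees deltas, never the phones' examples. In this toy every phone is sampling the same simple pattern, so the merged model recovers it.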

Federated learning works remarkably well for specific use cases. Google uses it to improve Gboard's autocorrect without seeing your messages. Hospitals can collaborate on diagnostic models without sharing patient records. The privacy benefits are genuine. But, unfortunately, barring a very significant advancement, you'll also give up the ability to build truly powerful models.

The best AI systems today work precisely because they learn from massive, centralized datasets. GPT-4 didn't become useful by stitching together millions of disconnected small models. It trained on an enormous corpus where patterns across languages, domains, and contexts could intermingle. The model learned that "bank" in a financial context means something different than "bank" next to "river" because it saw millions of examples of both contexts in the same training run.

Federated learning fragments this. Each local model learns its narrow domain well. But the meta-patterns - the deep connections across disparate contexts - never form because the data never touches. You can aggregate weight updates, but you can't aggregate the emergent understanding that comes from seeing everything together.

The trade-off is tough, to say the least. Either privacy through fragmentation or capability through consolidation. Want a medical AI that recognizes rare disease patterns? You need data from millions of patients in one place, seeing correlations across demographics, geographies, and comorbidities. Federated learning gives you a thousand small models that each know their local population but miss the global patterns that make rare diseases recognizable.

Want a language model that truly understands context across topics? You need the entire knowledge graph in one training run, not fragmented pieces that never quite connect.

This also isn't a temporary limitation. It's a fundamental property of how neural networks extract patterns. The system that can see everything learns things that isolated systems cannot. Federated learning deliberately sacrifices that capability for privacy.

Maybe that's the right trade-off. Maybe we don't need hyper-capable general models and should prefer narrow, specialized systems. But let's be clear: we're choosing between privacy and performance. We don't get both.

So What Do We Actually Do?

I don't have an answer here. I'm not sure anyone does.

We're applying filing cabinet logic to baking problems. "Just delete the data" makes sense when data is stored discretely. It's nonsensical when data is transformed into distributed mathematical structure. Differential privacy obscures but doesn't eliminate the transformation. Federated learning avoids the problem by preventing the learning we actually want.

Maybe the answer is regulating the inputs, i.e., what data can be used for training in the first place. Maybe it's transparency requirements about training sets before the baking happens. Maybe it's completely different architectures that maintain some form of data provenance, even if that means accepting significant capability limits.

What won't work is pretending we can pull individual eggs out of finished cakes while maintaining the cake's structure. The math doesn't allow it. The architecture prevents it. And no amount of legislation will change that reality.

We need entirely new frameworks built around the actual properties of how these systems work. Frameworks that acknowledge the trade-offs: privacy versus capability, reversibility versus efficiency, local control versus global learning. Because right now, we're mandating the impossible and wondering why no one can comply.

The regulators want their data back. The engineers are holding a cake. And everyone's pretending those two things are compatible.

Onward!

Want to learn how intelligent data pipelines can reduce your AI costs? Check out Expanso. Or don't. Who am I to tell you what to do.

NOTE: I'm currently writing a book based on what I have seen about the real-world challenges of data preparation for machine learning, focusing on operations, compliance, and cost. I'd love to hear your thoughts!